|

Ekaterina Lobacheva

I am a deep learning researcher focused mainly on understanding neural network training dynamics, representation learning, and generalization. I am also interested in ensemble and model-averaging methods, the geometry of loss landscapes, and how training shapes representations across learning paradigms, including self-supervised, transfer, continual, and online learning.

I am currently a postdoctoral researcher at Mila and Université de Montréal, working in Sarath Chandar’s lab with support from IVADO. Previously, I earned a Specialist degree (BSc + MSc) from Lomonosov Moscow State University, completed a PhD in computer science at HSE University under the supervision of Dmitry Vetrov, and held a postdoctoral position at Mila with Nicolas Le Roux and Irina Rish.

Email / CV / Google Scholar / GitHub / Twitter

|

|

|

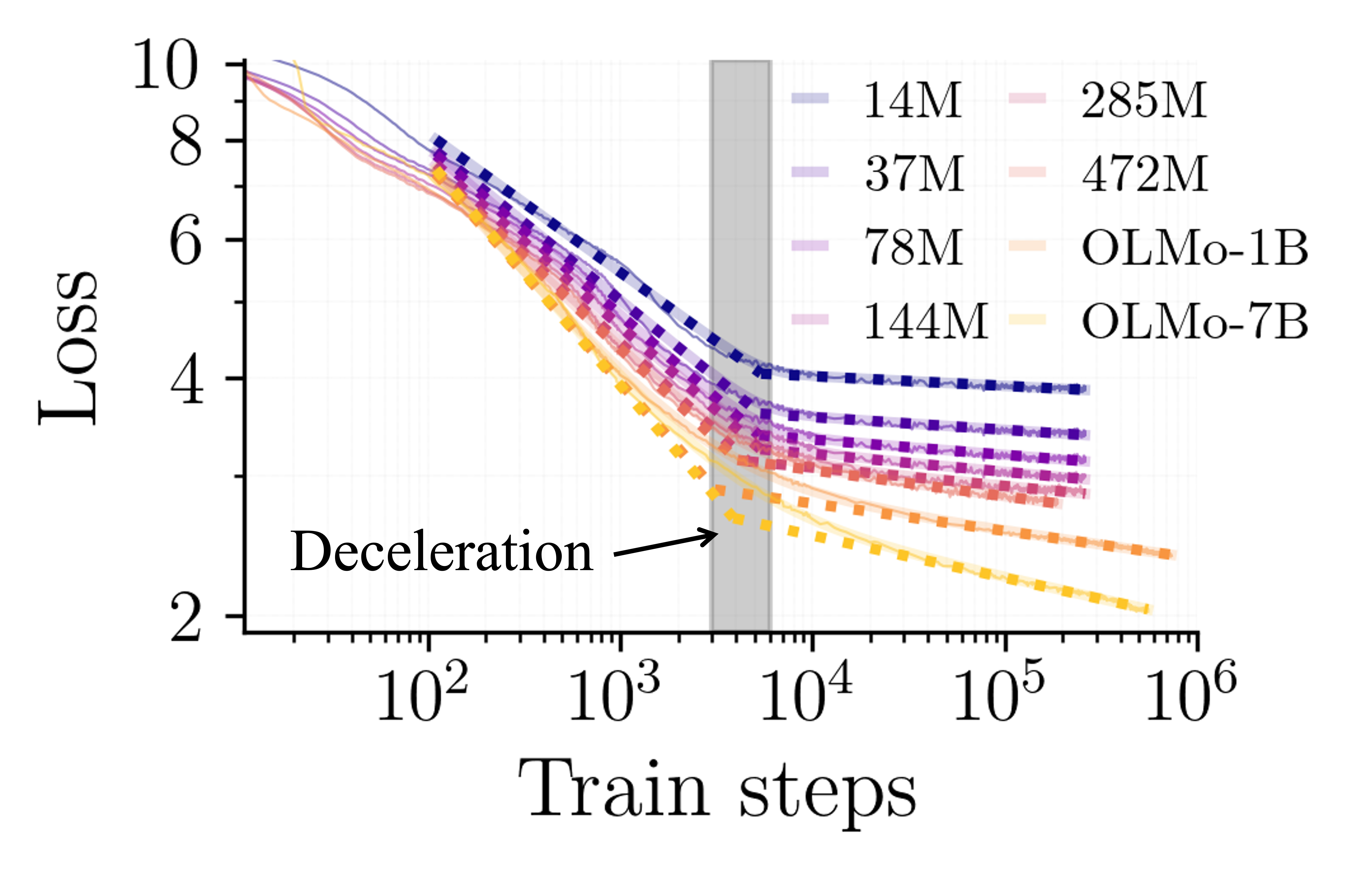

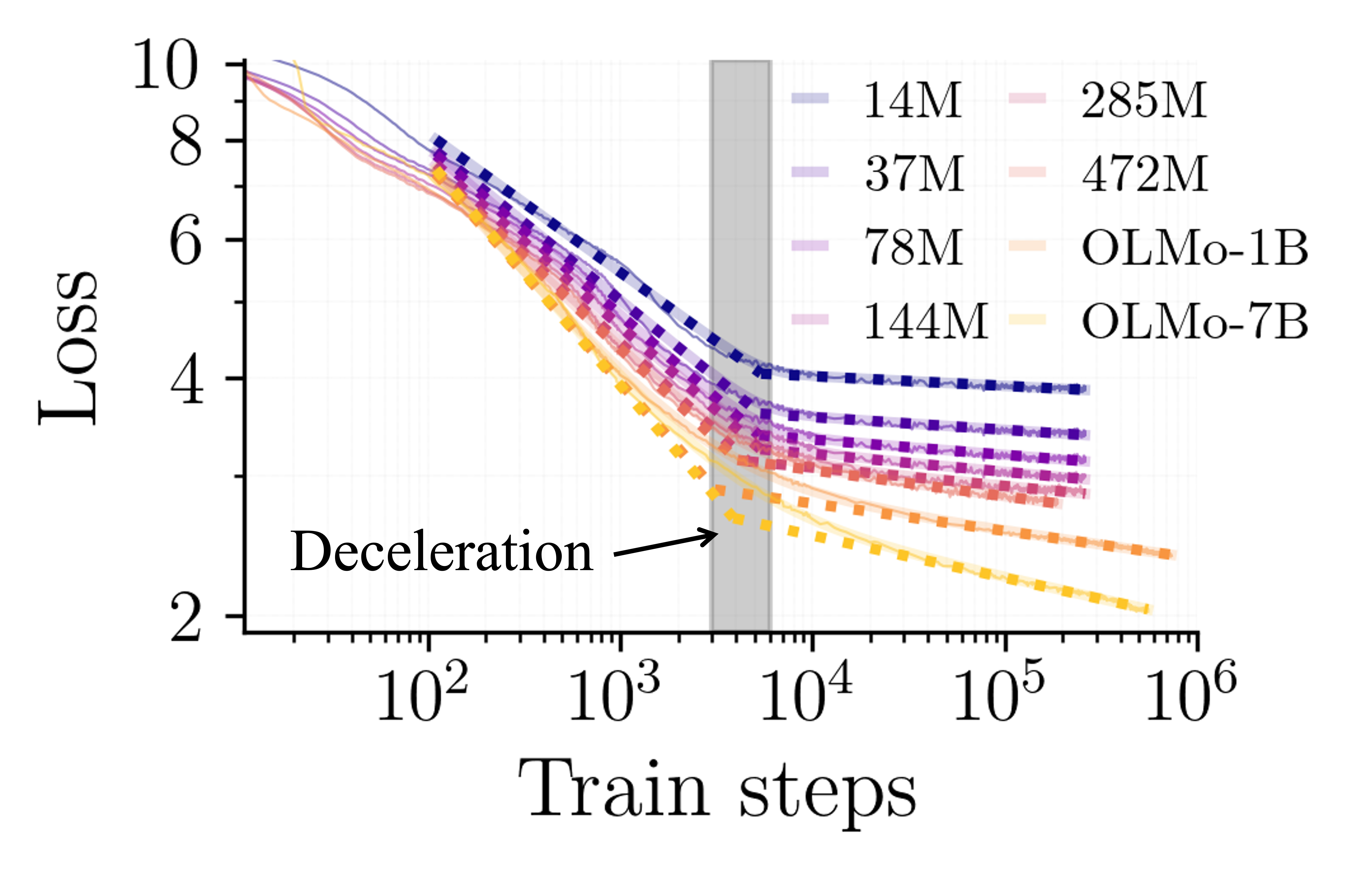

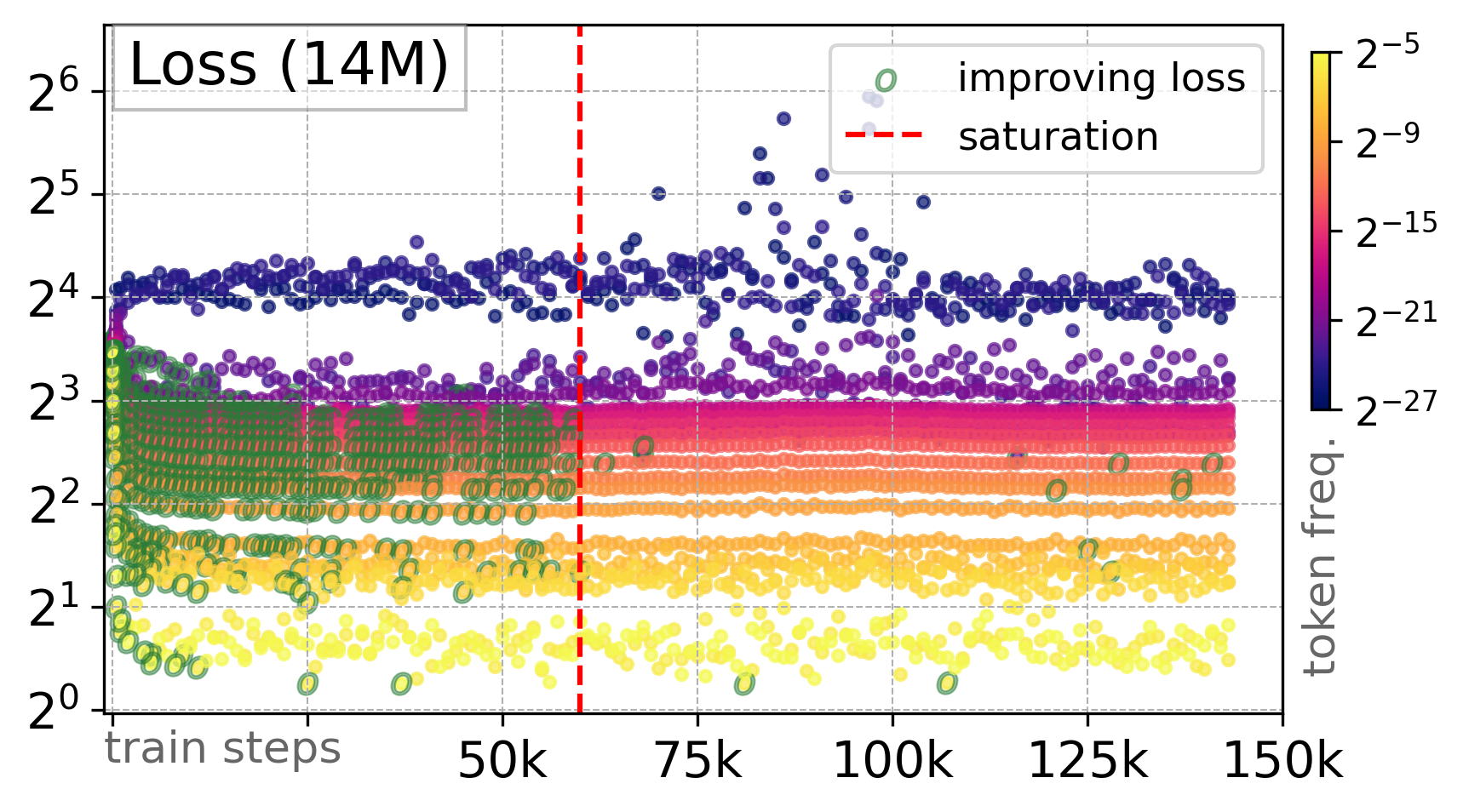

Training Dynamics Underlying Language Model Scaling Laws: Loss Deceleration and Zero-Sum Learning

Andrei Mircea,

Supriyo Chakraborty,

Nima Chitsazan,

Irina Rish,

Ekaterina Lobacheva

Annual Meeting of the Association for Computational Linguistics (ACL), 2025 (Oral)

Workshop on Scientific Methods for Understanding Deep Learning (SciForDL) at NeurIPS, 2024

project page

/

paper

/

code

/

short video

/

bibtex

|

|

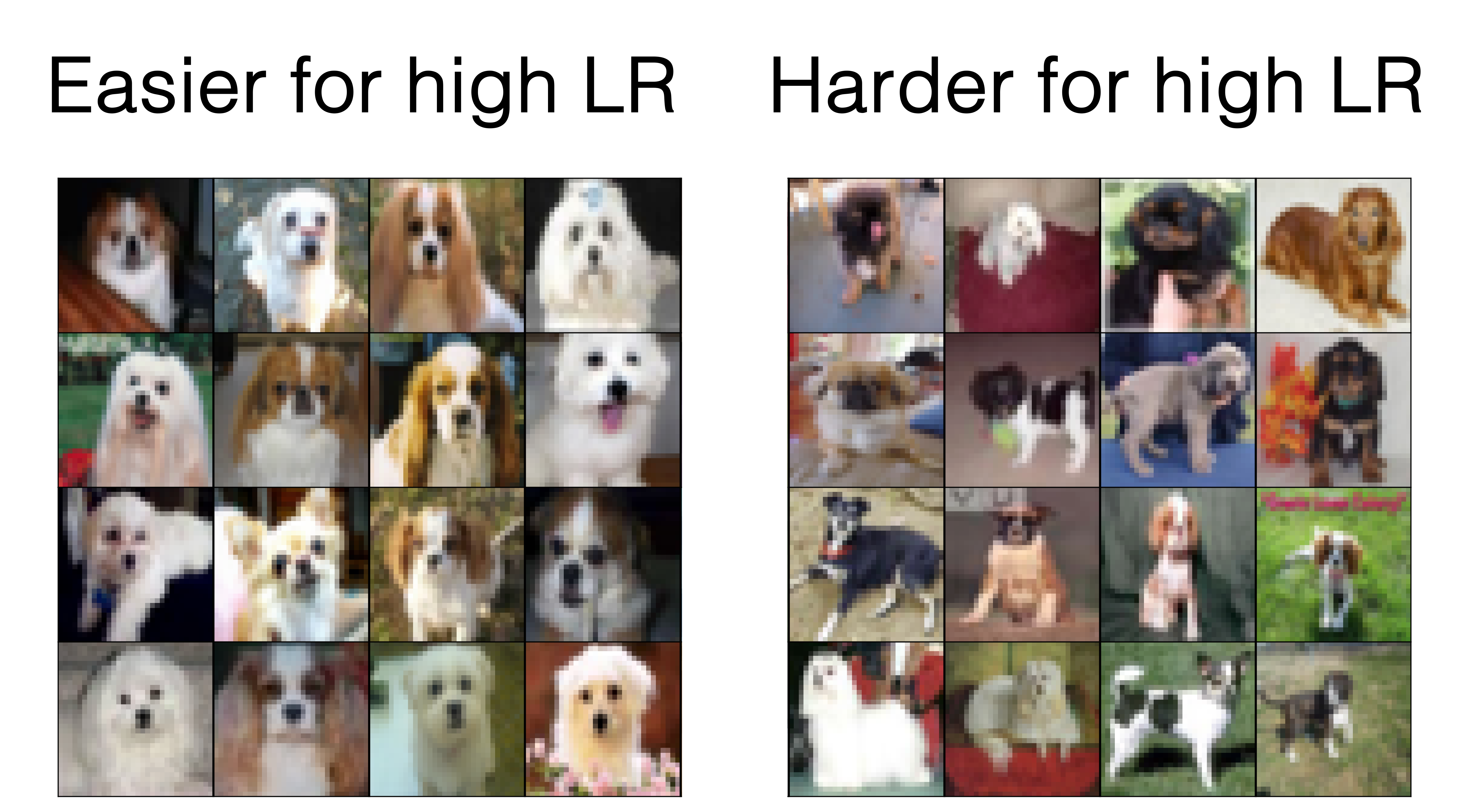

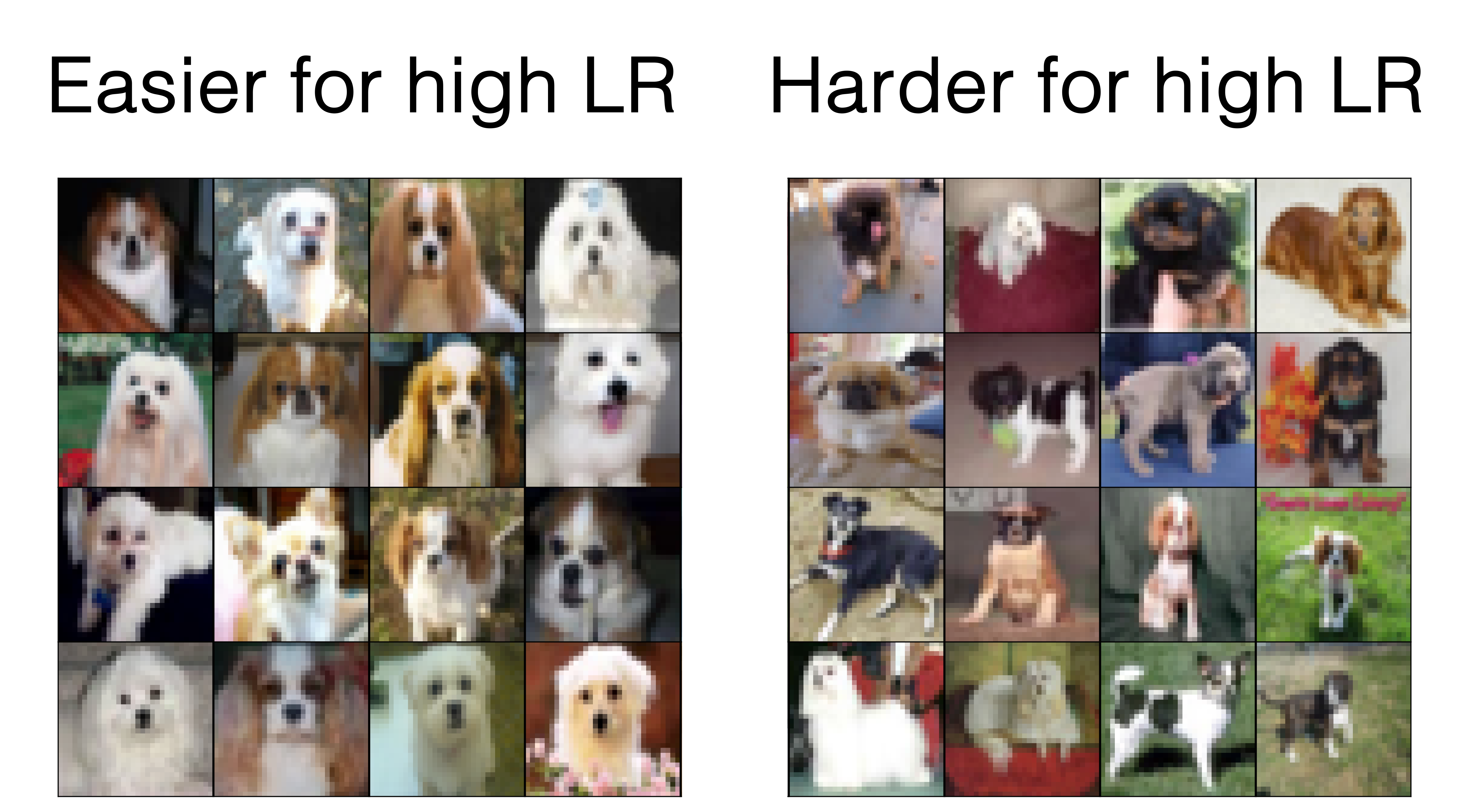

How Learning Rates Shape Neural Network Focus: Insights from Example Ranking

Ekaterina Lobacheva,

Keller Jordan,

Aristide Baratin,

Nicolas Le Roux

Workshop on Scientific Methods for Understanding Deep Learning (SciForDL) at NeurIPS, 2024

openreview

/

bibtex

|

|

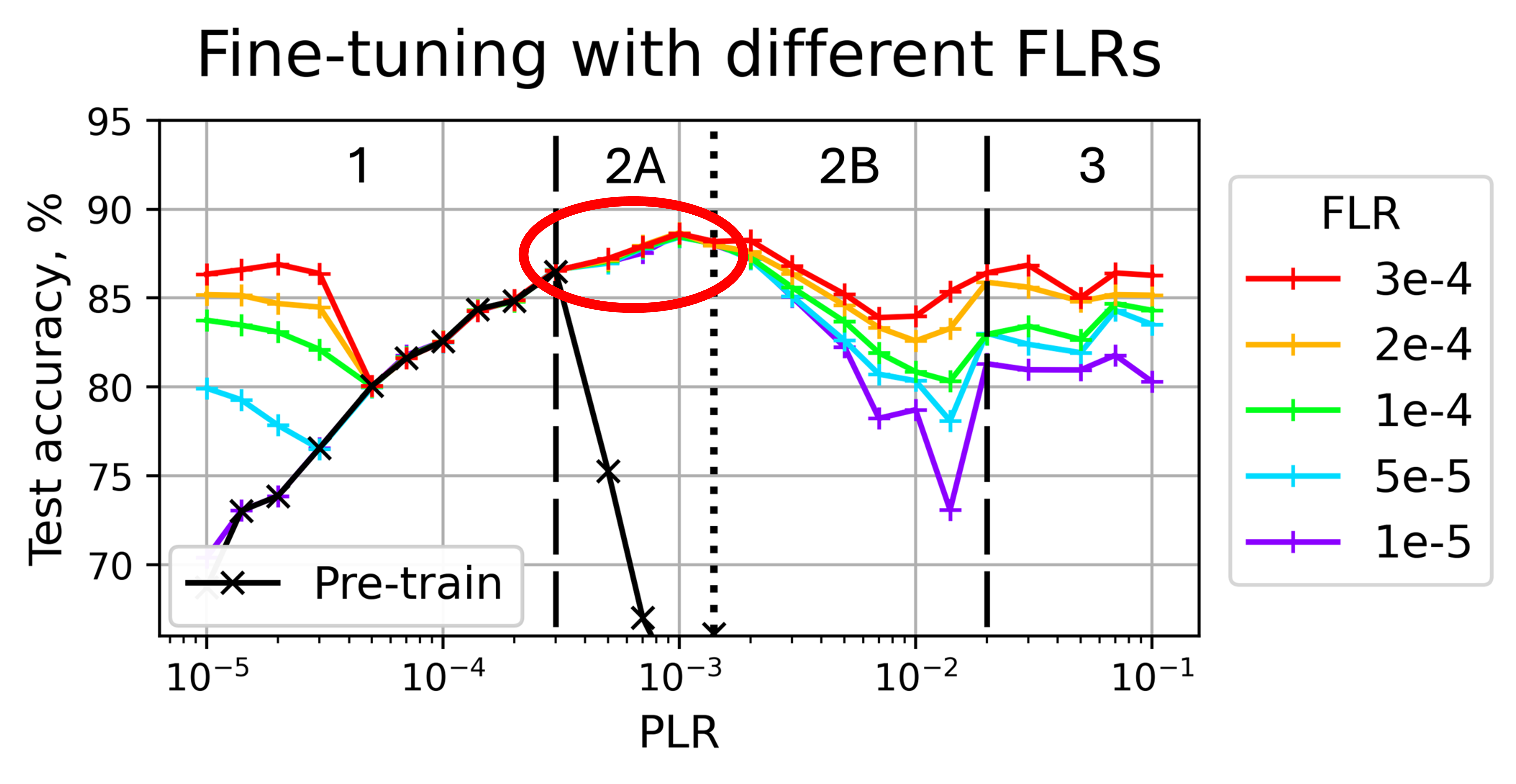

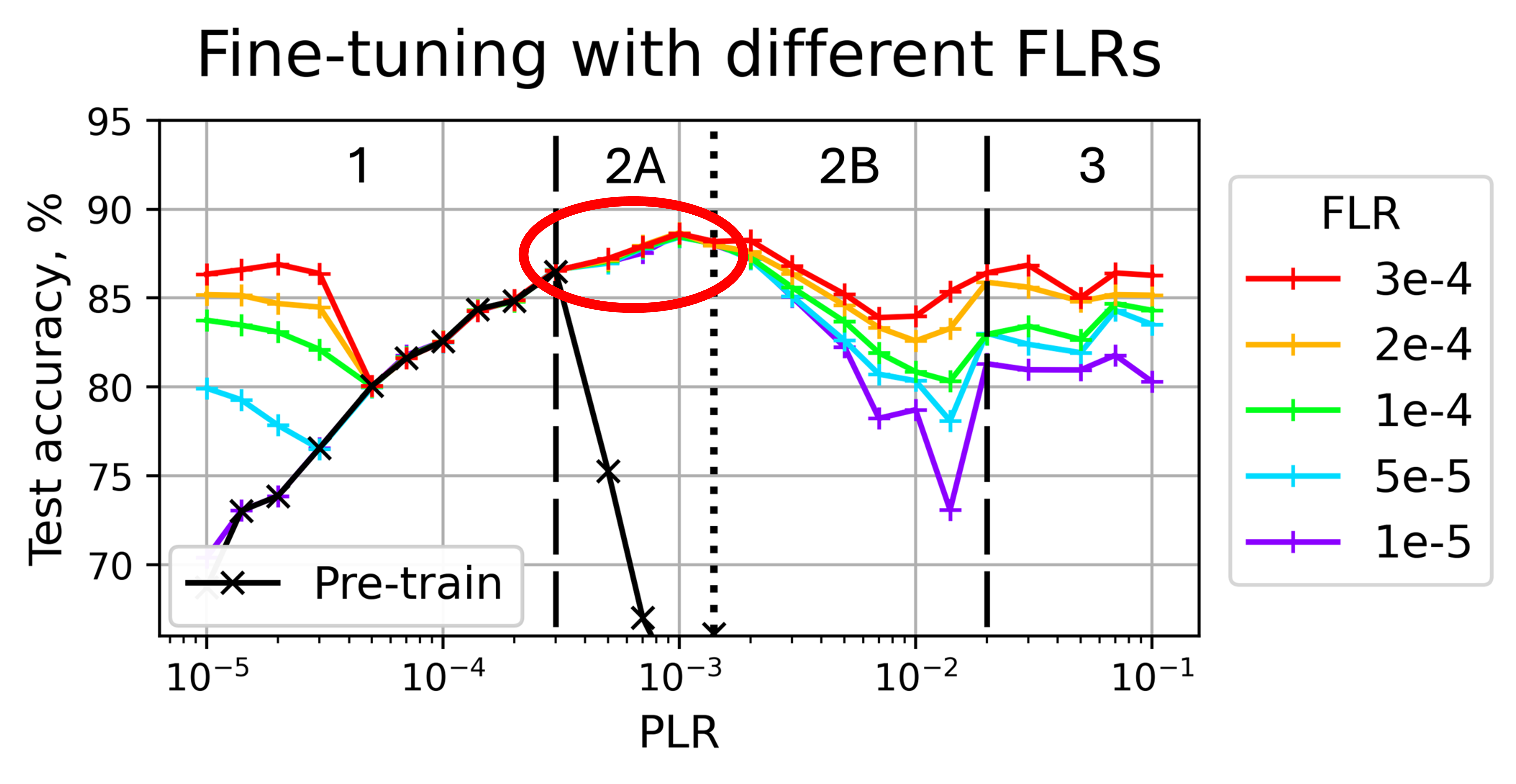

Where Do Large Learning Rates Lead Us?

Ildus Sadrtdinov*,

Maxim Kodryan*,

Eduard Pockonechnyy*,

Ekaterina Lobacheva†,

Dmitry Vetrov†

Neural Information Processing Systems (NeurIPS), 2024

Workshop on High-dimensional Learning Dynamics (HiLD) at ICML, 2024

Mathematics of Modern Machine Learning Workshop at NeurIPS, 2023

paper

/

openreview

/

bibtex

|

|

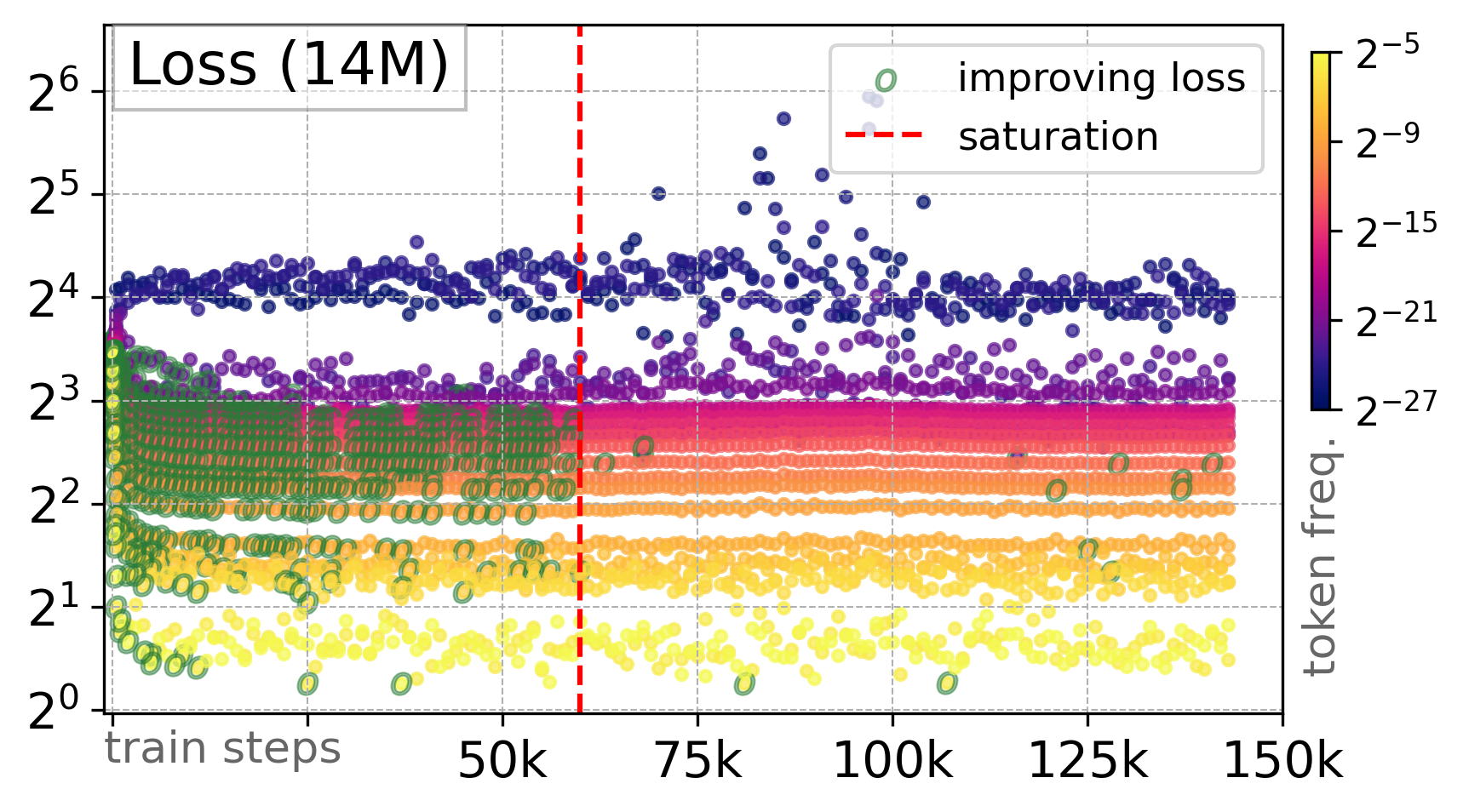

Gradient Dissent in Language Model Training and Saturation

Andrei Mircea,

Ekaterina Lobacheva,

Irina Rish

Workshop on High-dimensional Learning Dynamics (HiLD) at ICML, 2024

openreview

/

bibtex

|

|

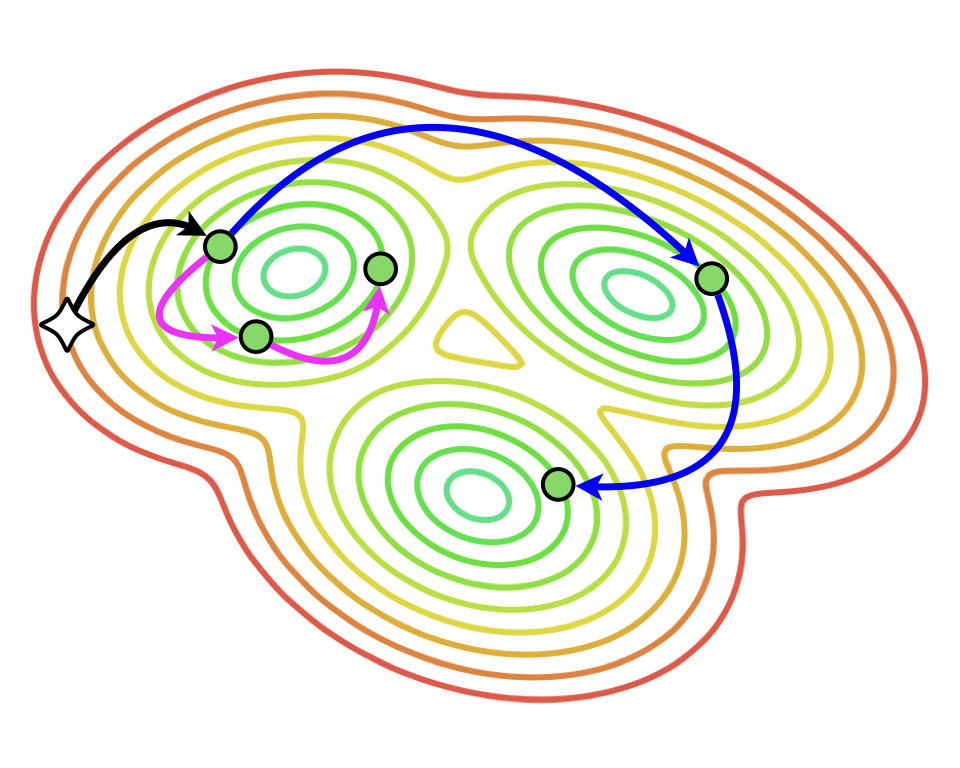

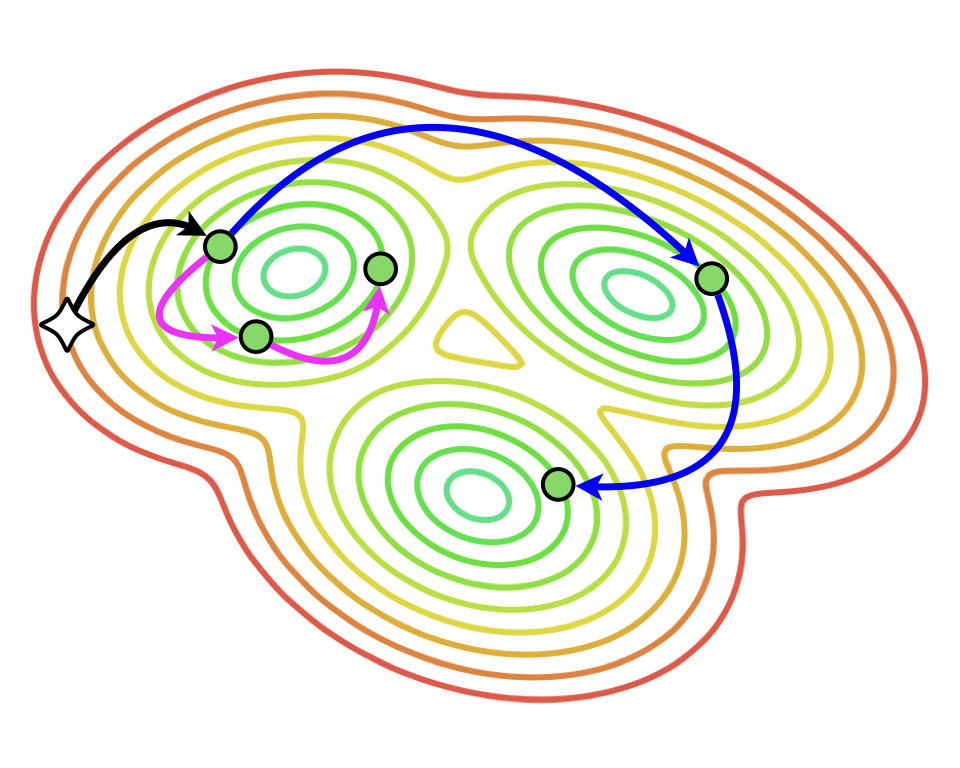

To Stay or Not to Stay in the Pre-train Basin: Insights on Ensembling in Transfer Learning

Ildus Sadrtdinov*,

Dmitrii Pozdeev*,

Dmitry Vetrov,

Ekaterina Lobacheva

Neural Information Processing Systems (NeurIPS), 2023

paper

/

openreview

/

code

/

short poster video

/

bibtex

|

|

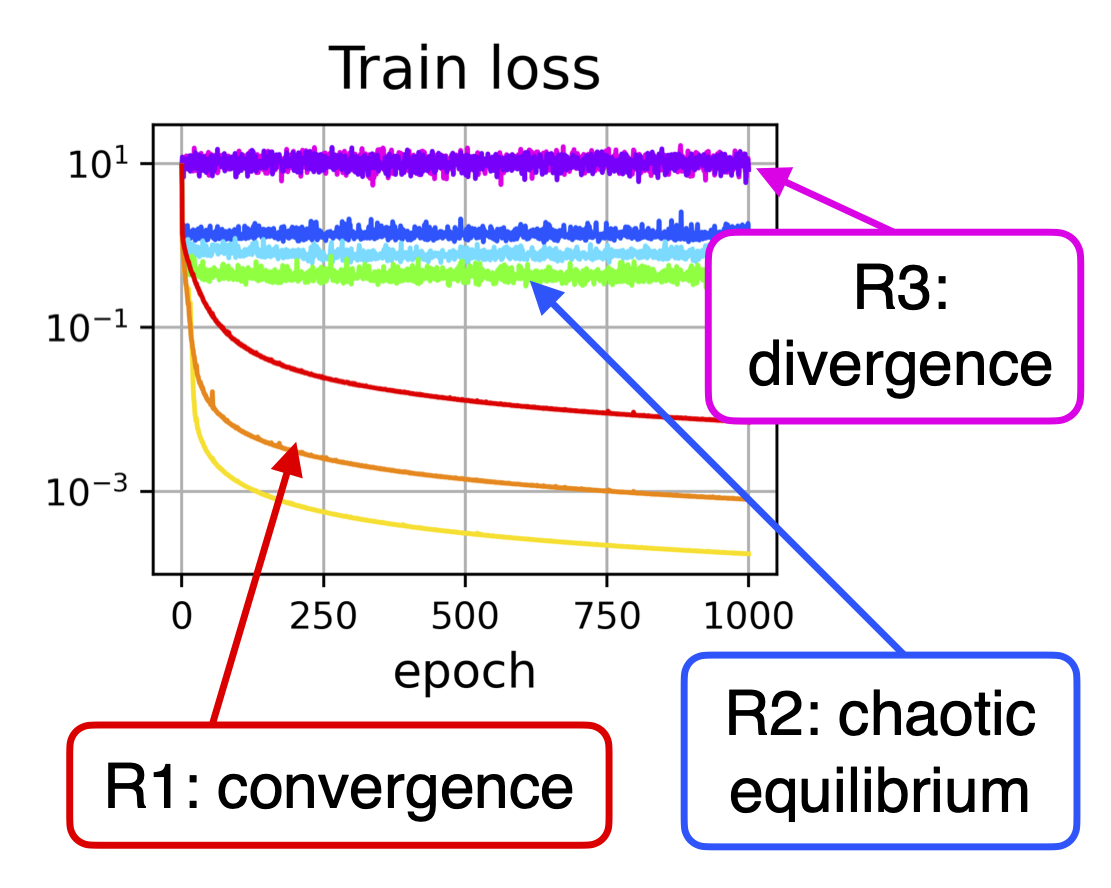

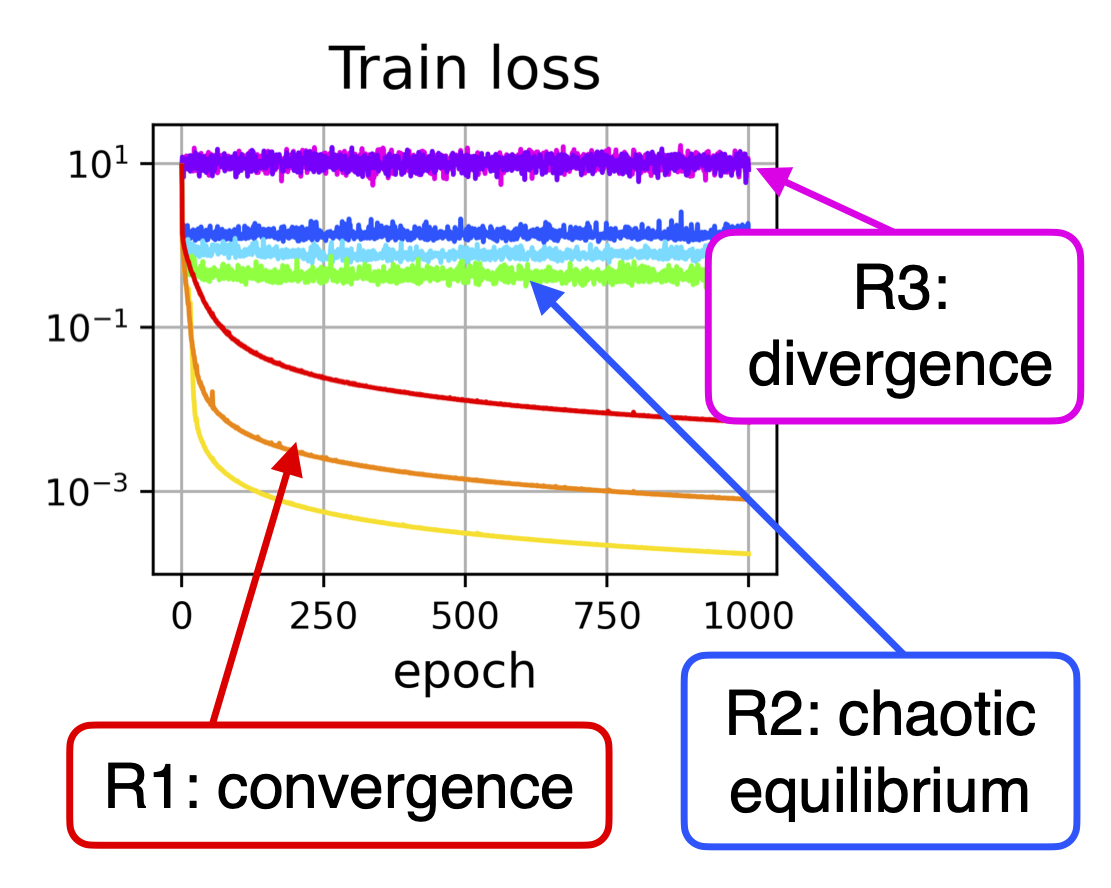

Training Scale-Invariant Neural Networks on the Sphere Can Happen in Three Regimes

Maxim Kodryan*,

Ekaterina Lobacheva*,

Maksim Nakhodnov*,

Dmitry Vetrov

Neural Information Processing Systems (NeurIPS), 2022

paper

/

openreview

/

code

/

short poster video

/

long talk (in Russian)

/

bibtex

|

|

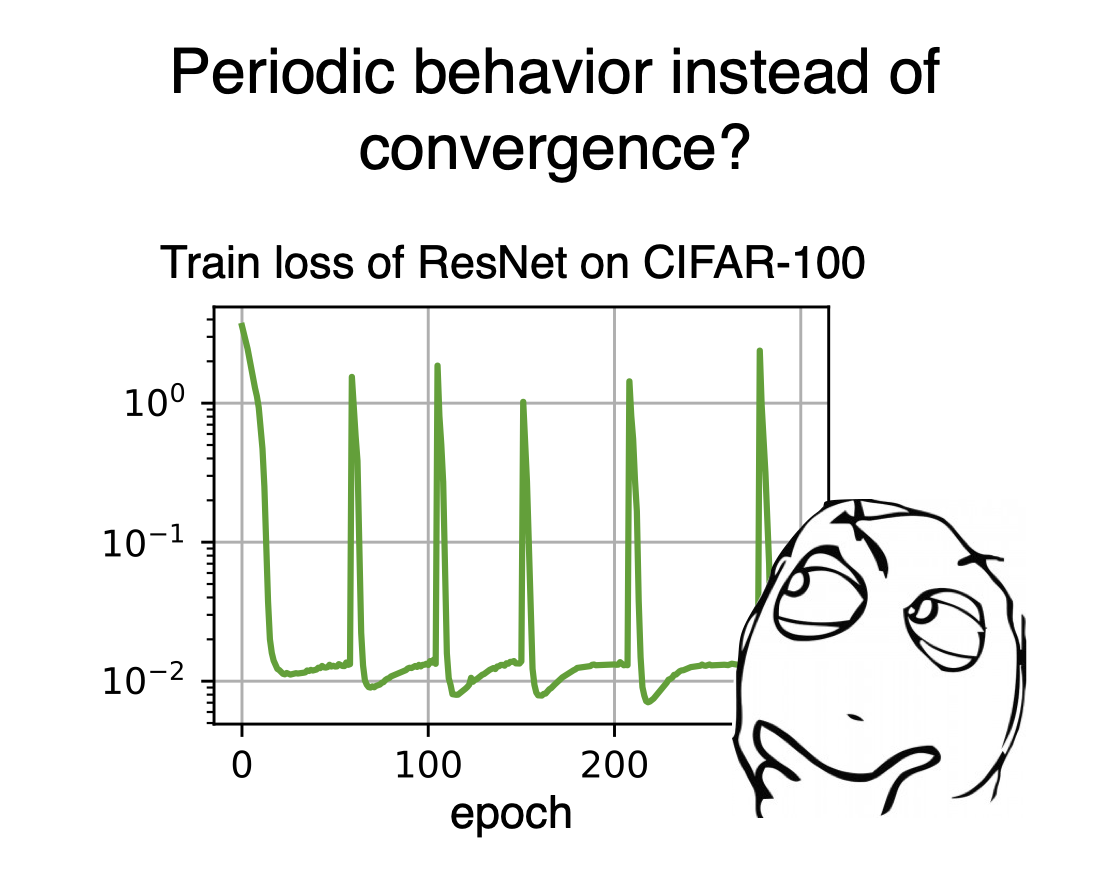

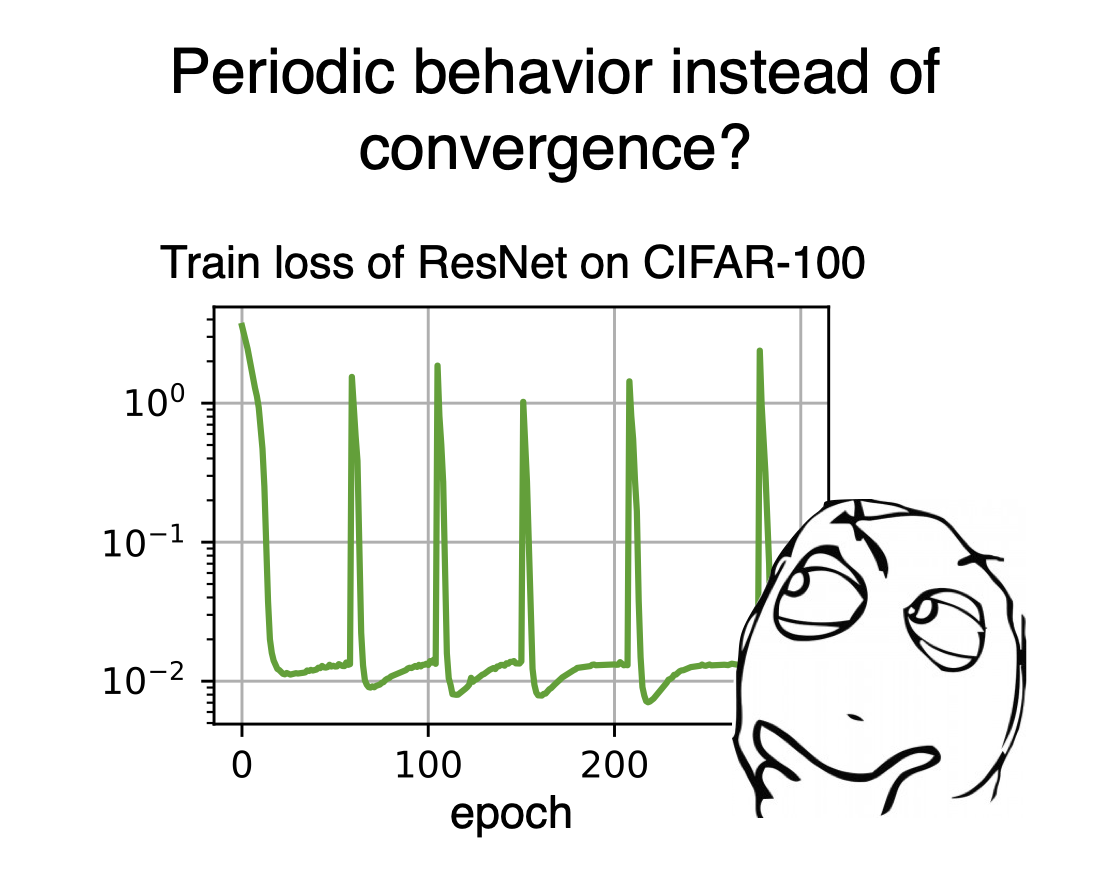

On the Periodic Behavior of Neural Network Training with Batch Normalization and Weight Decay

Ekaterina Lobacheva*,

Maxim Kodryan*,

Nadezhda Chirkova,

Andrey Malinin,

Dmitry Vetrov

Neural Information Processing Systems (NeurIPS), 2021

paper

/

openreview

/

code

/

short poster video

/

long talk

/

bibtex

|

|

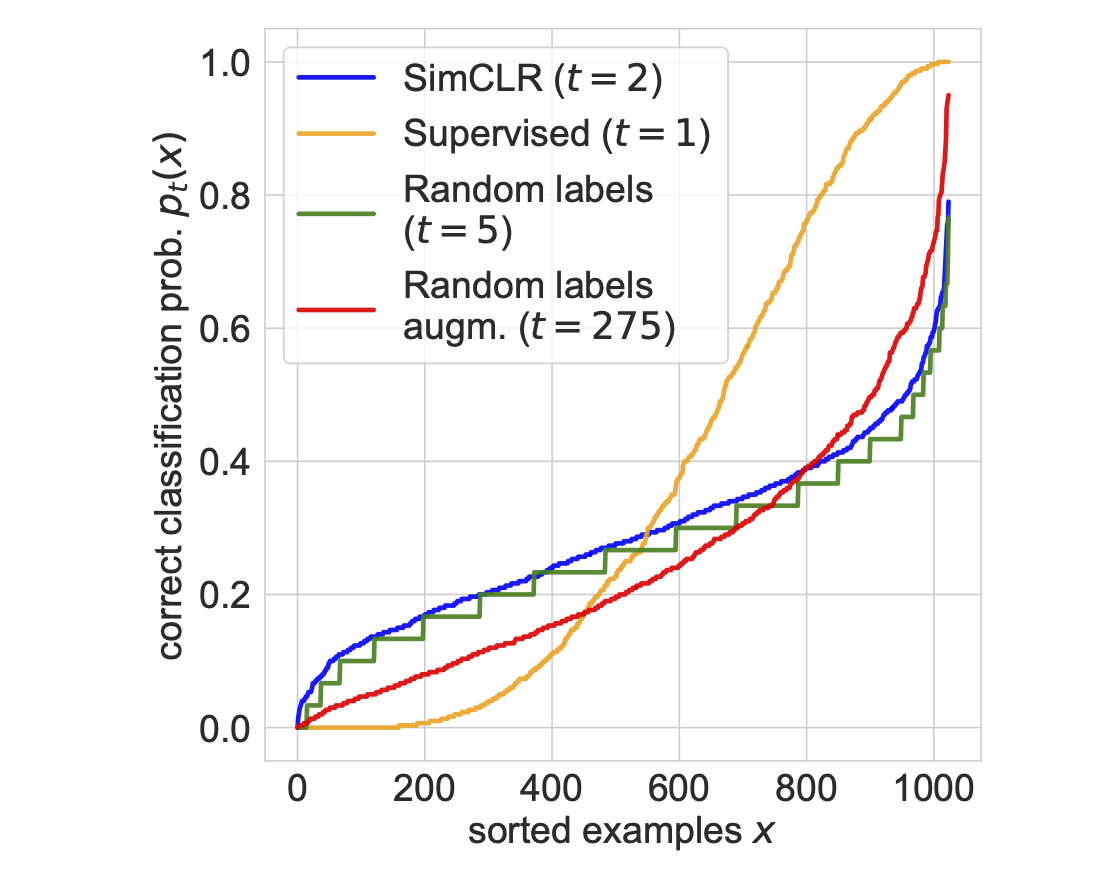

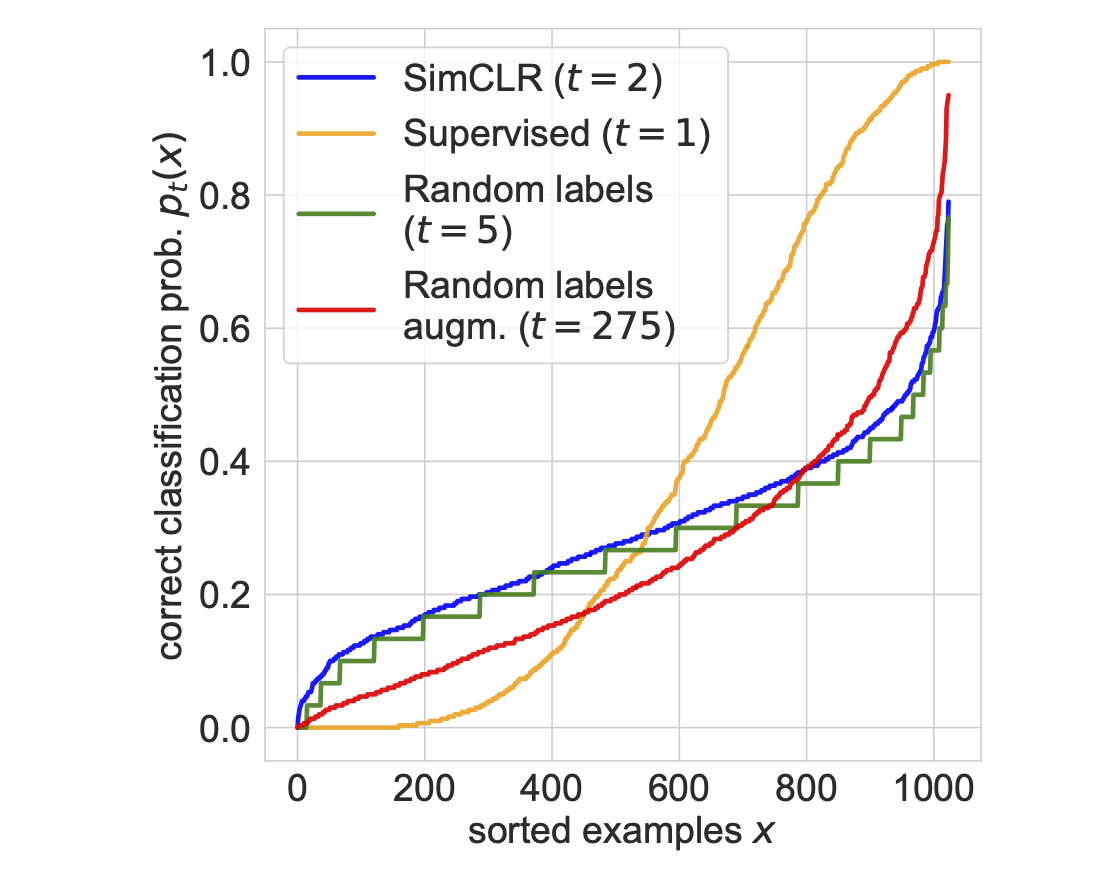

On the Memorization Properties of Contrastive Learning

Ildus Sadrtdinov,

Nadezhda Chirkova,

Ekaterina Lobacheva

Workshop on Overparameterization: Pitfalls & Opportunities at ICML, 2021

paper

/

bibtex

|

|

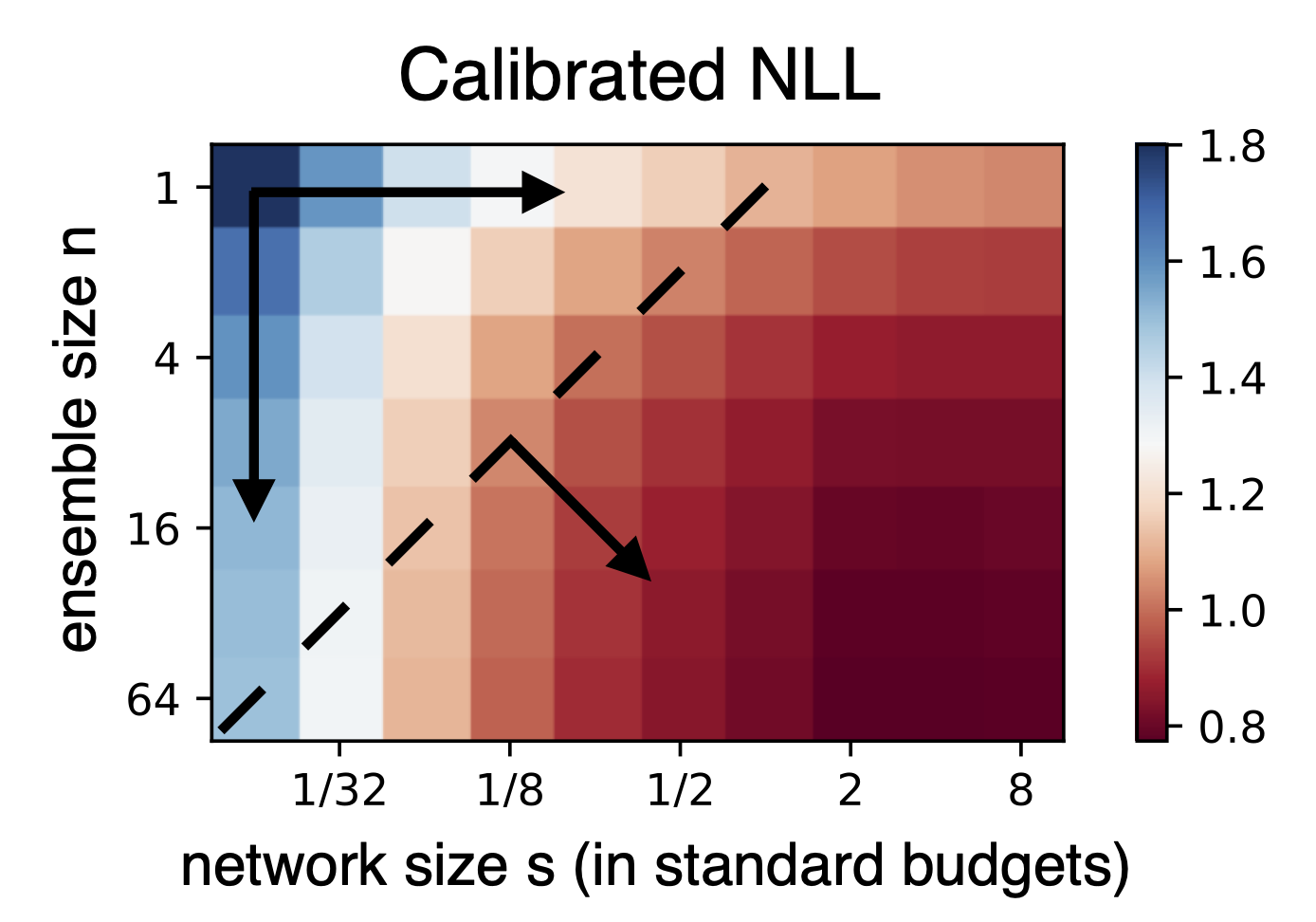

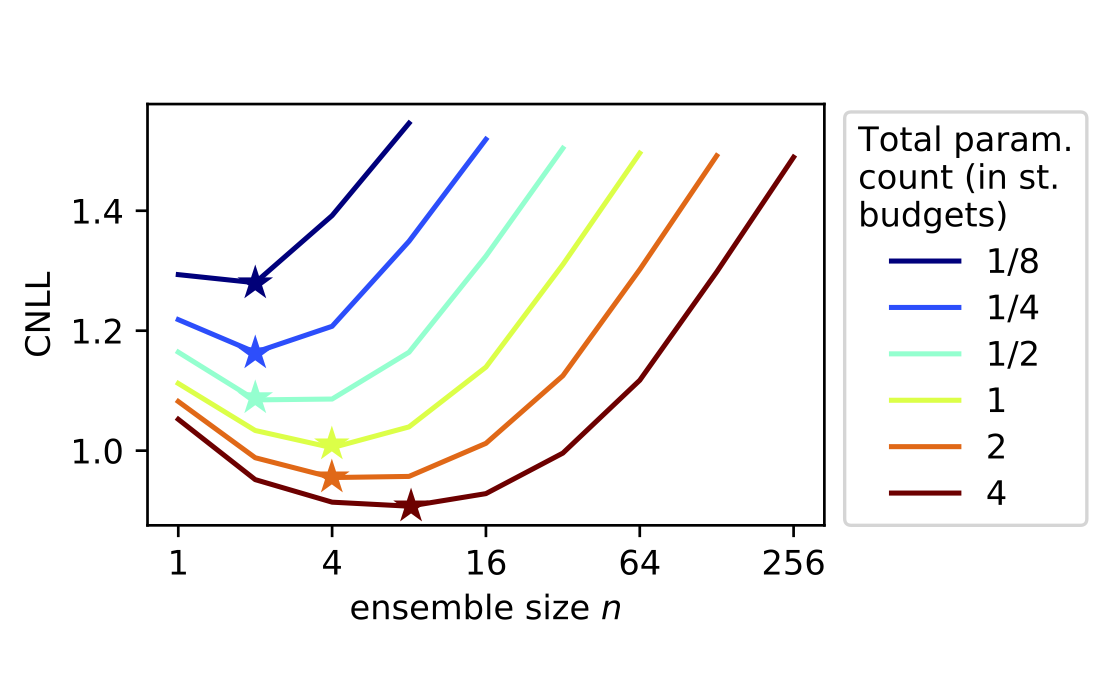

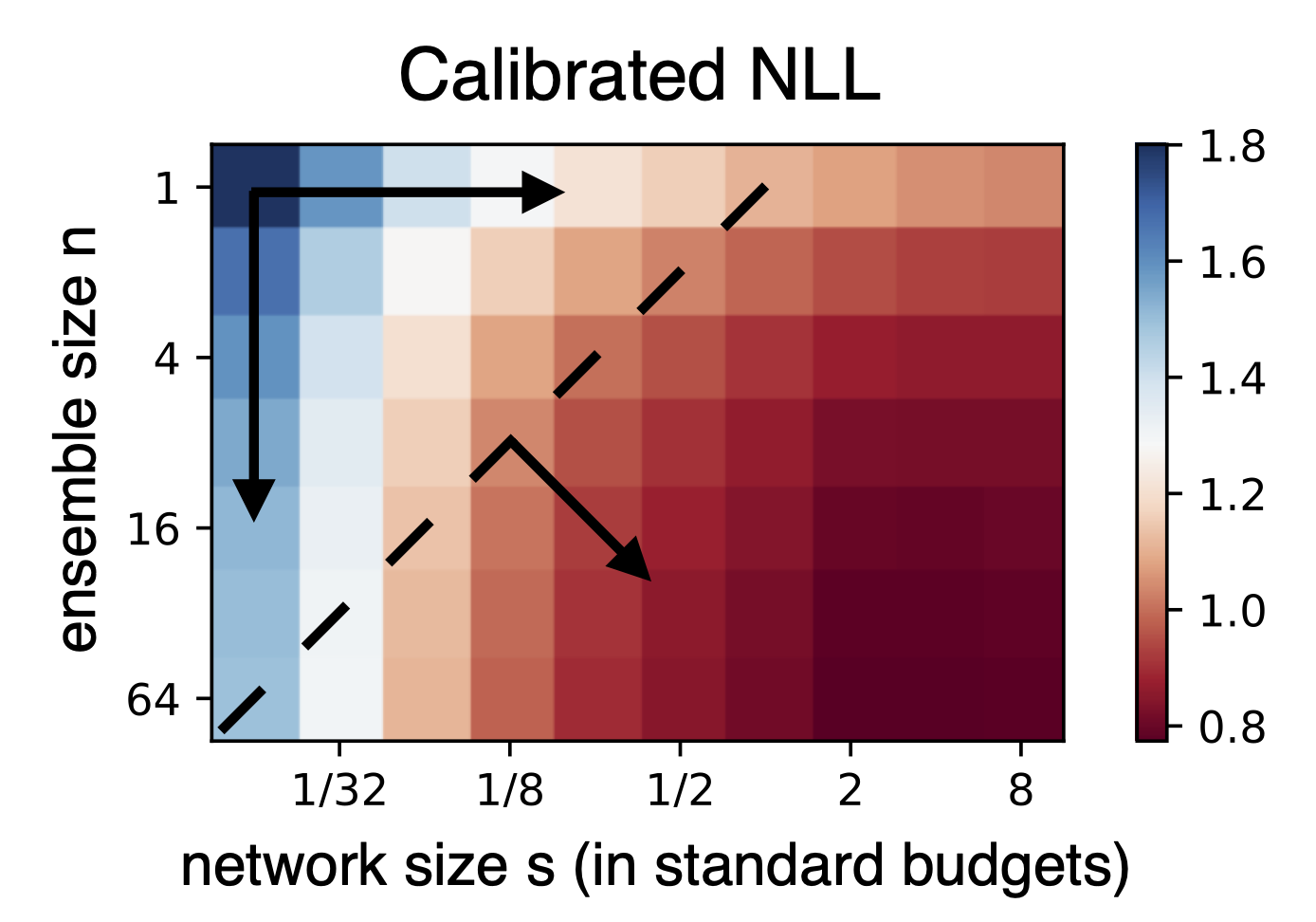

On Power Laws in Deep Ensembles

Ekaterina Lobacheva,

Nadezhda Chirkova,

Maxim Kodryan,

Dmitry Vetrov

Neural Information Processing Systems (NeurIPS), 2020 (Spotlight)

Workshop on Uncertainty and Robustness in Deep Learning at ICML, 2020

paper

/

reviews

/

code

/

short poster video

/

long talk (in Russian)

/

bibtex

|

|

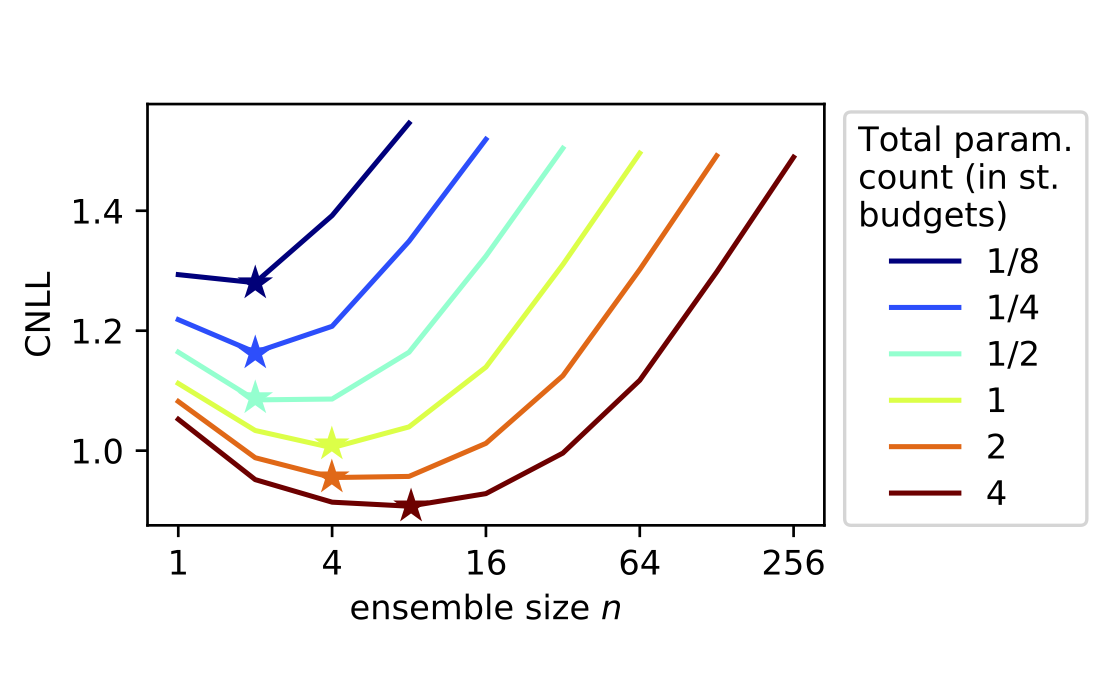

Deep Ensembles on a Fixed Memory Budget: One Wide Network or Several Thinner Ones?

Nadezhda Chirkova,

Ekaterina Lobacheva,

Dmitry Vetrov

arXiv preprint, 2020

paper

/

bibtex

|

|

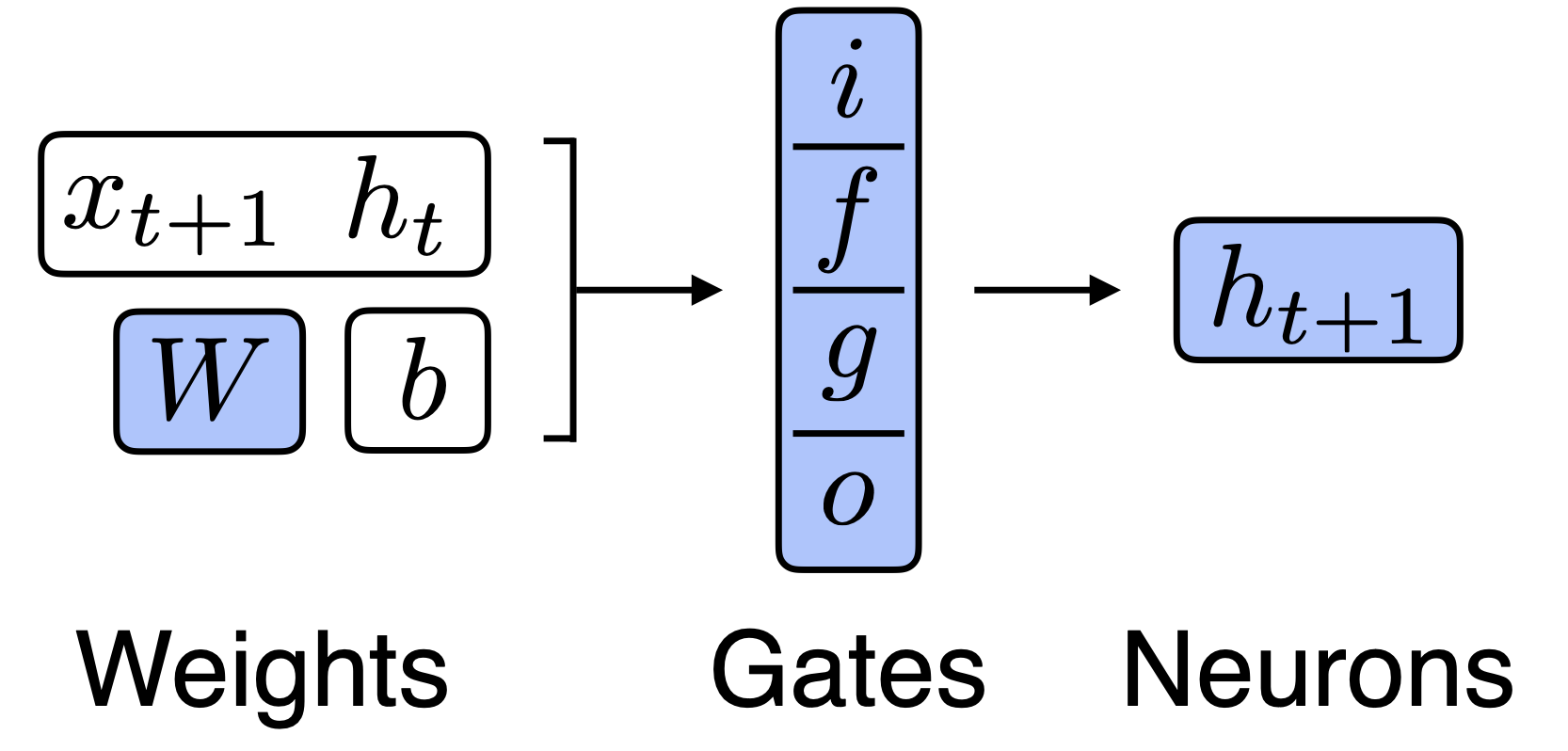

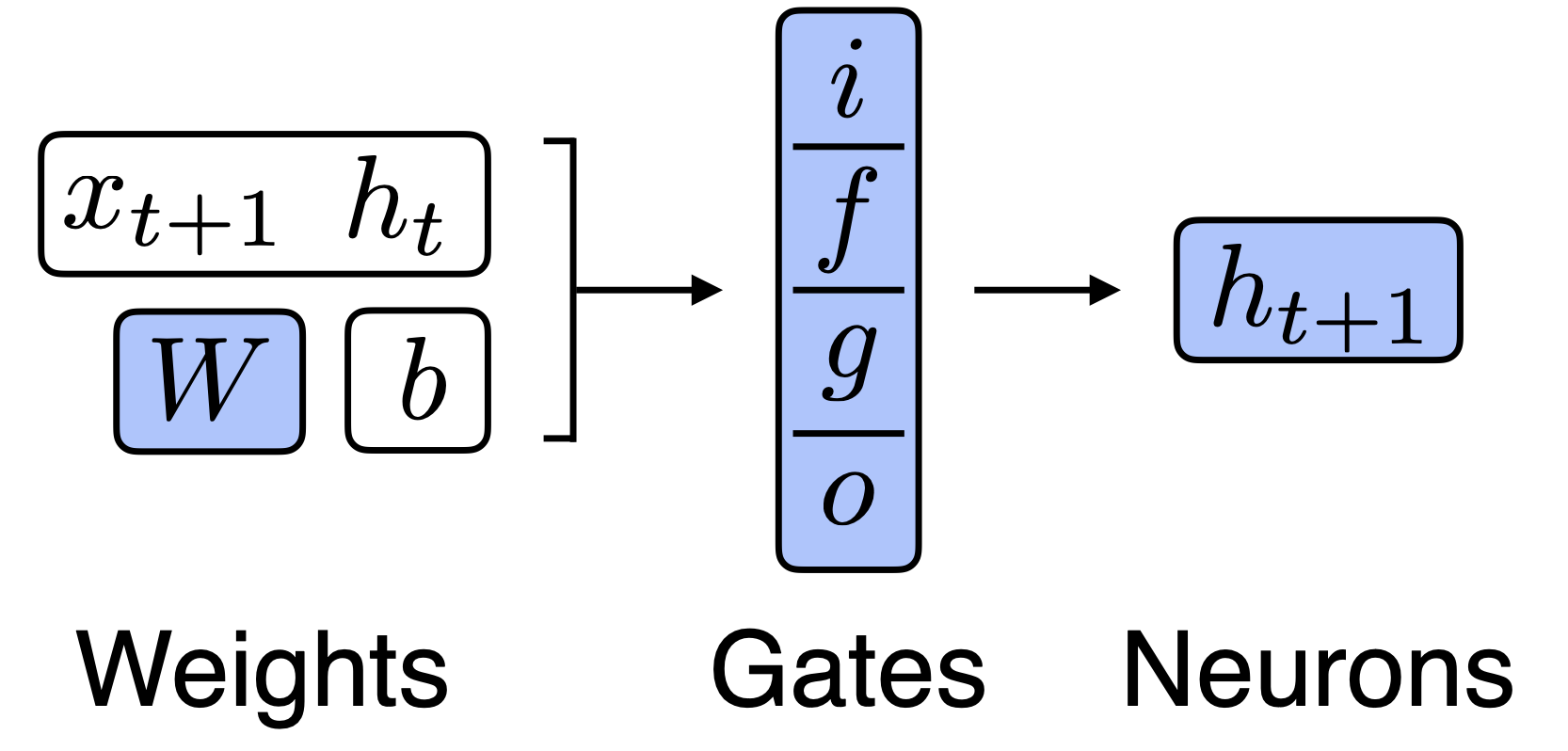

Structured Sparsification of Gated Recurrent Neural Networks

Ekaterina Lobacheva*,

Nadezhda Chirkova*,

Alexander Markovich,

Dmitry Vetrov

AAAI Conference on Artificial Intelligence, 2020 (Oral)

Workshop on Context and Compositionality in Biological and Artificial Neural Systems at NeurIPS, 2019

Workshop on Compact Deep Neural Networks with Industrial Applications at NeurIPS, 2018

paper

/

code

/

bibtex

|

|

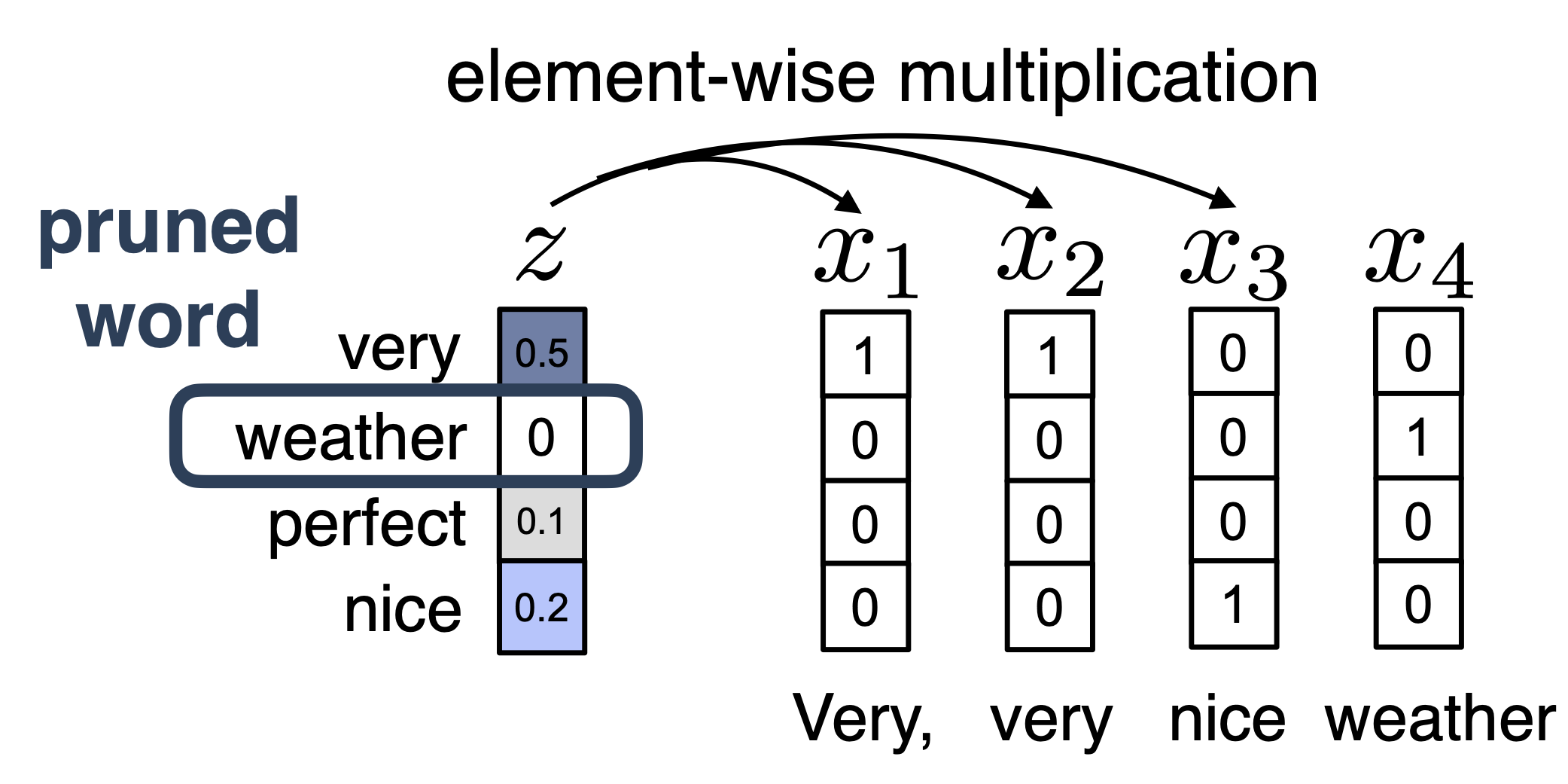

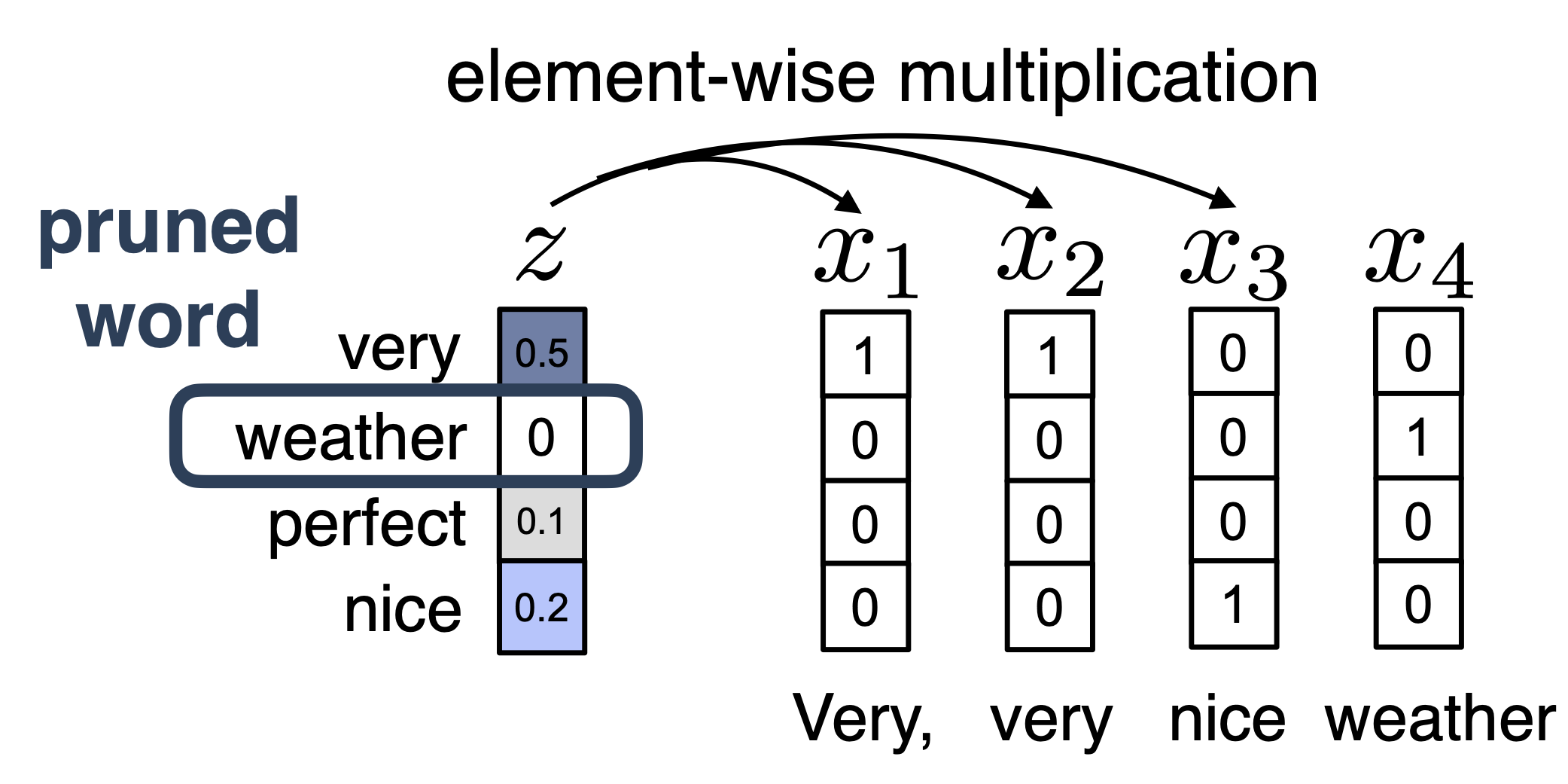

Bayesian Compression for Natural Language Processing

Nadezhda Chirkova*,

Ekaterina Lobacheva*,

Dmitry Vetrov

Conference on Empirical Methods in Natural Language Processing (EMNLP), 2018

Workshop on Learning to Generate Natural Language at ICML, 2017

paper

/

code

/

bibtex

|

|

Adaptive prediction time for sequence classification

Maksim Ryabinin,

Ekaterina Lobacheva

preprint, 2018

paper

/

openreview

/

bibtex

|

|

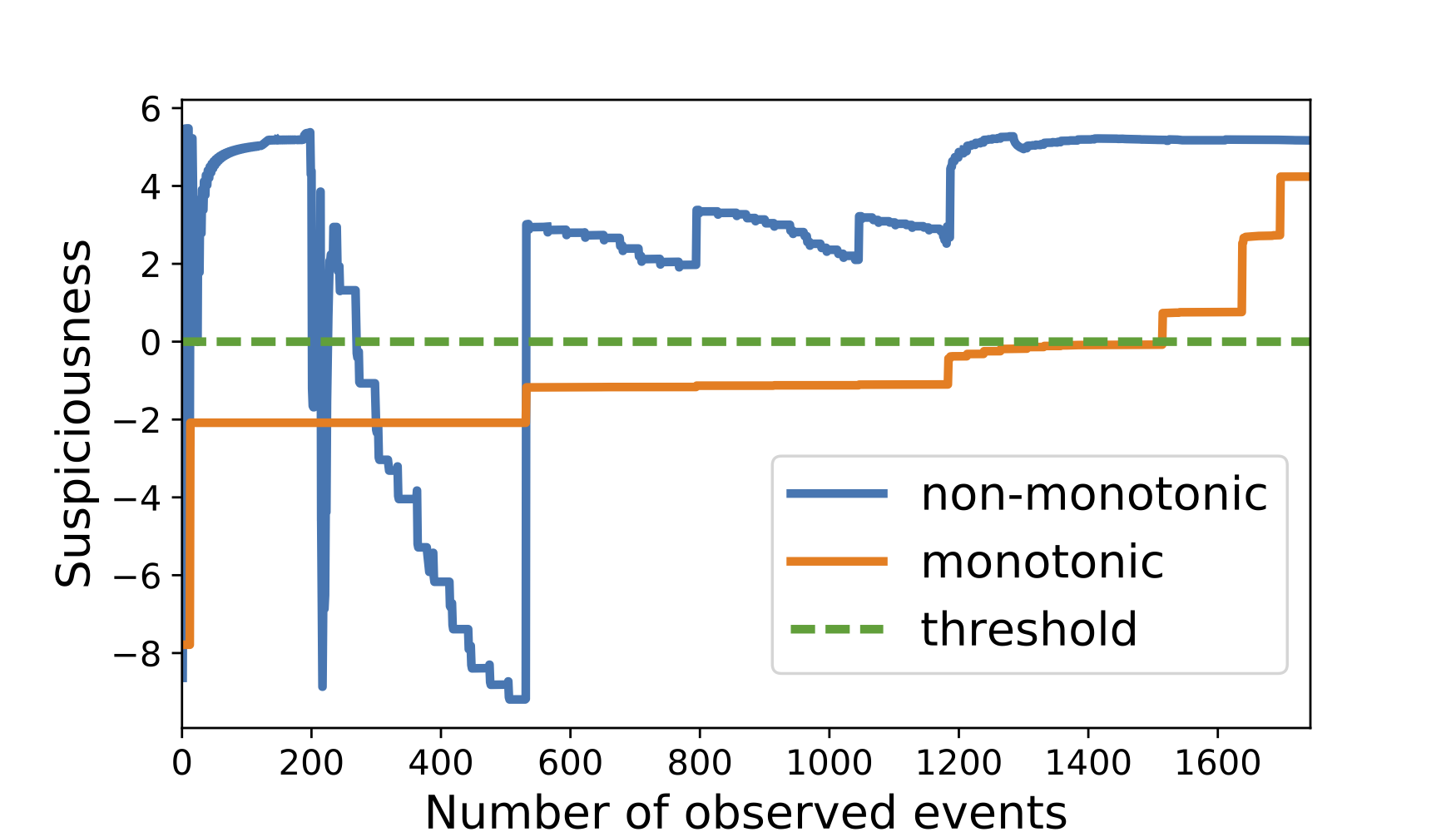

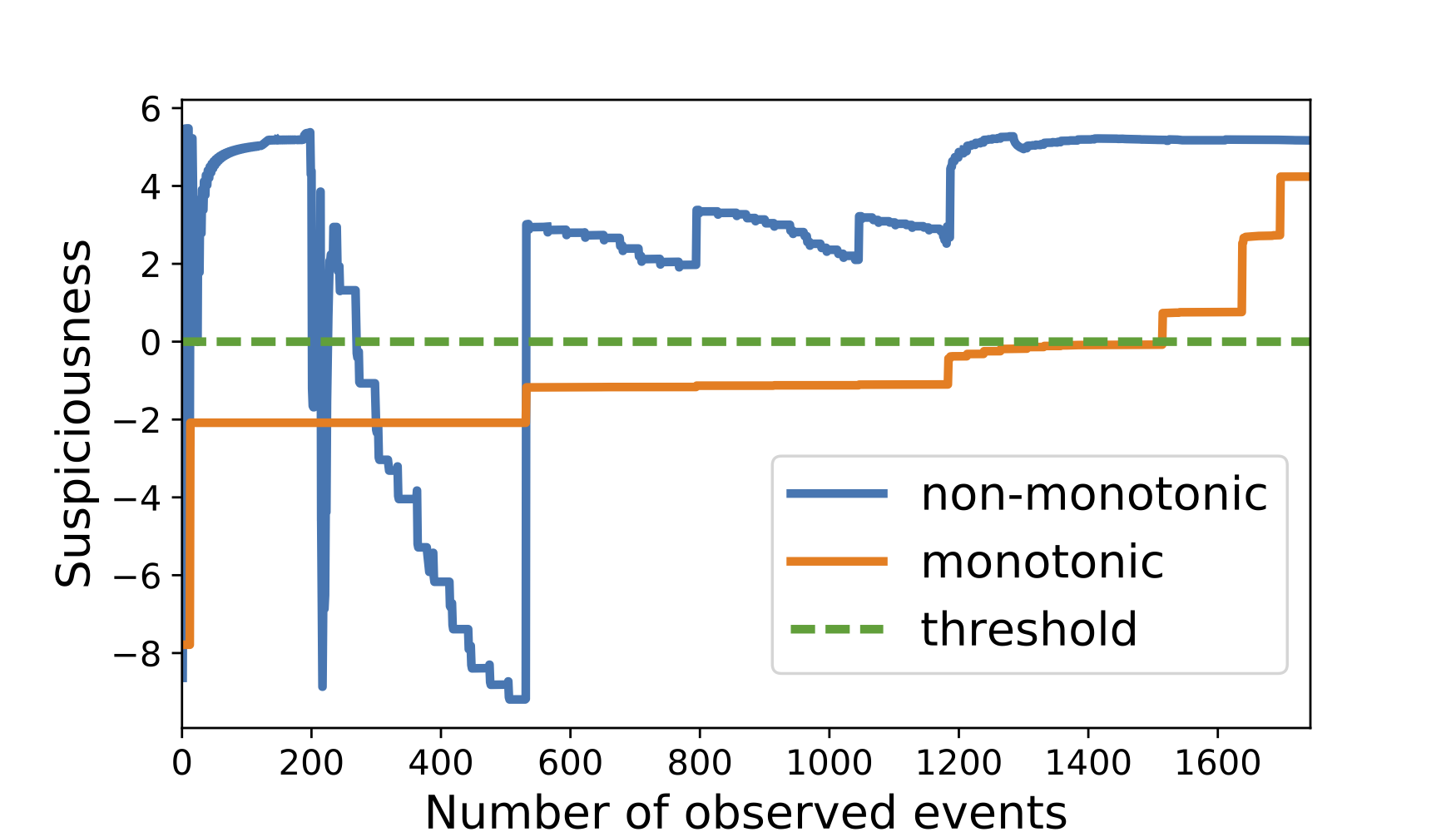

Monotonic models for real-time dynamic malware detection

Alexander Chistyakov,

Ekaterina Lobacheva,

Alexander Shevelev,

Alexey Romanenko

ICLR Workshop, 2018

paper

/

openreview

/

bibtex

|

|

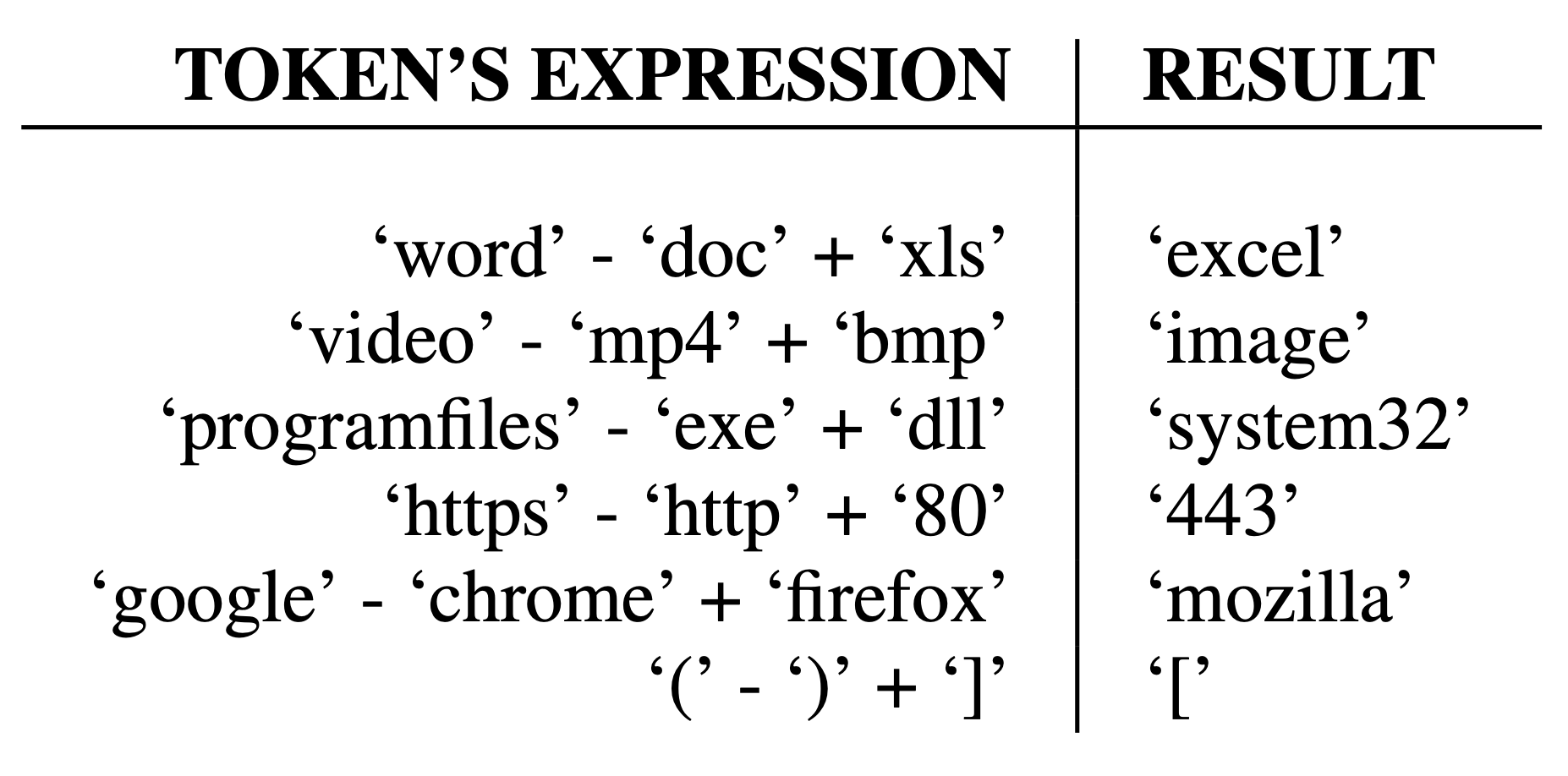

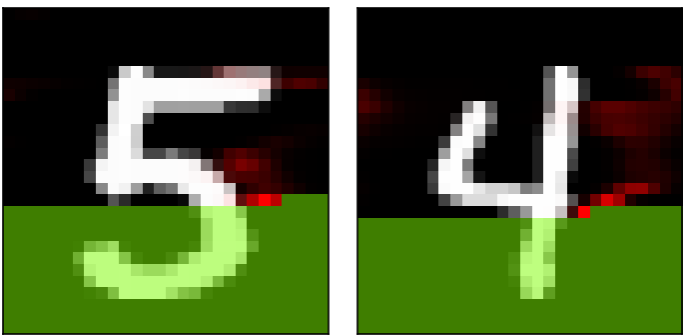

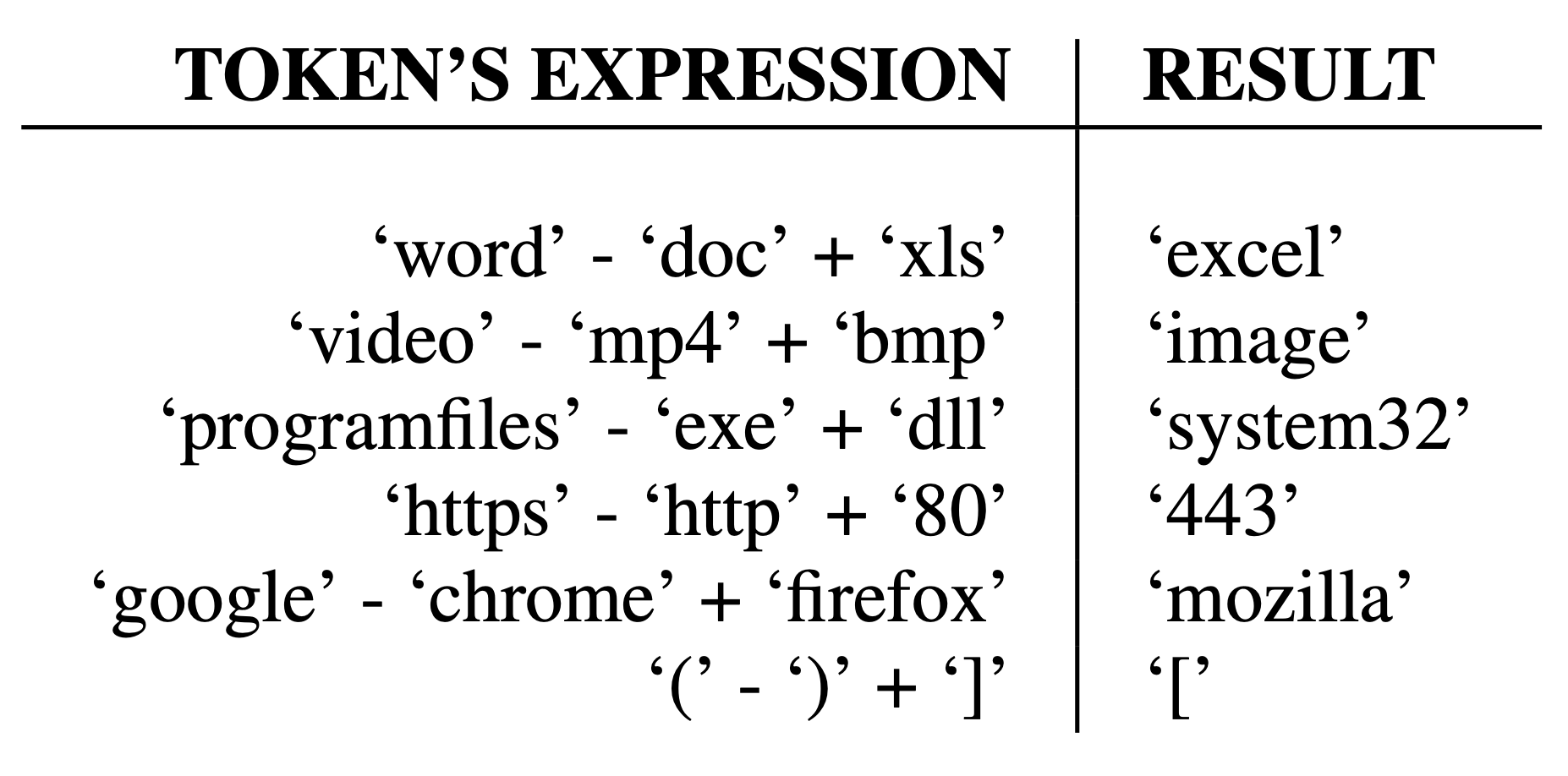

Semantic embeddings for program behavior

Alexander Chistyakov,

Ekaterina Lobacheva,

Arseny Kuznetsov,

Alexey Romanenko

ICLR Workshop, 2017

paper

/

openreview

/

bibtex

|

|

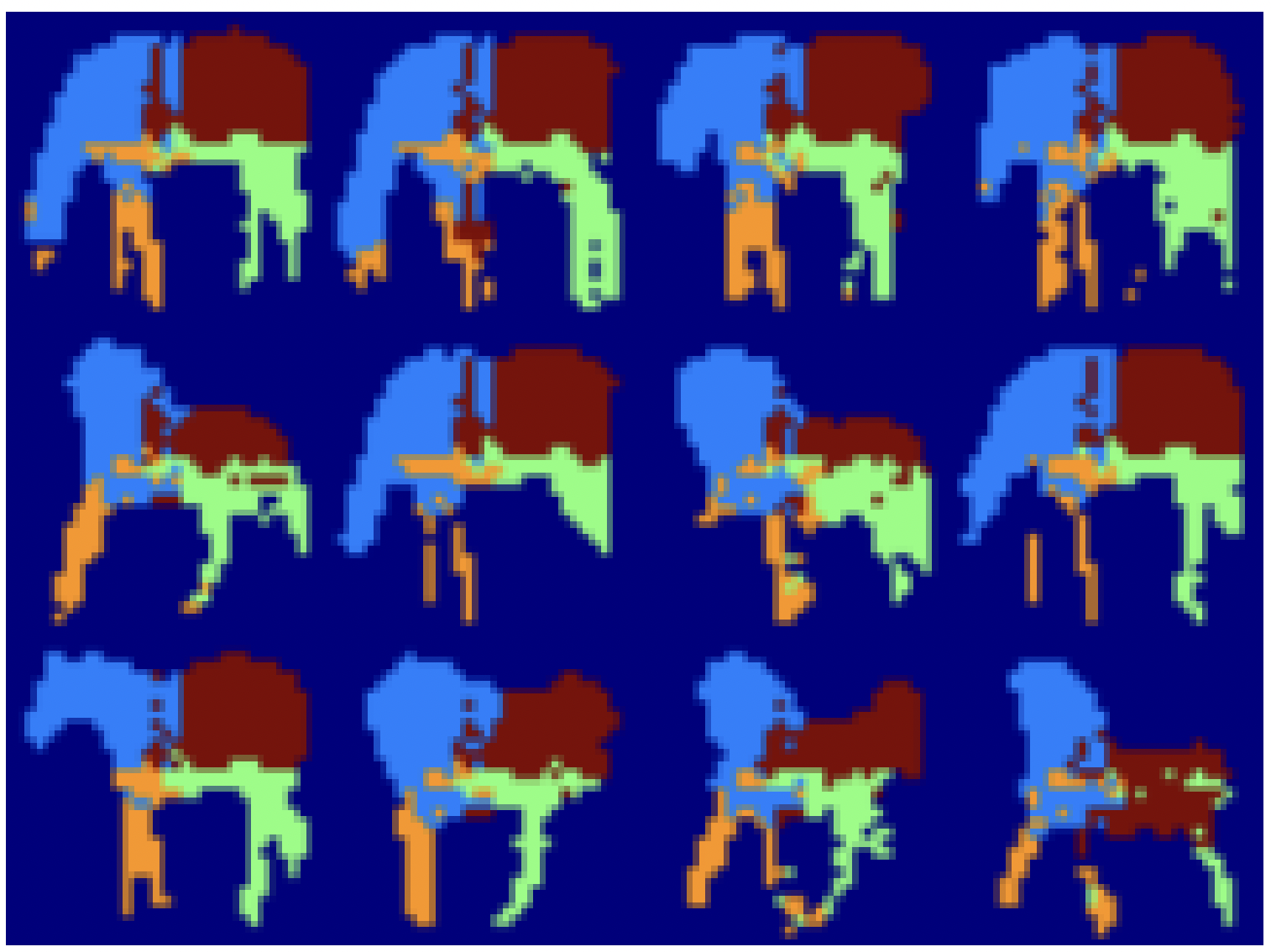

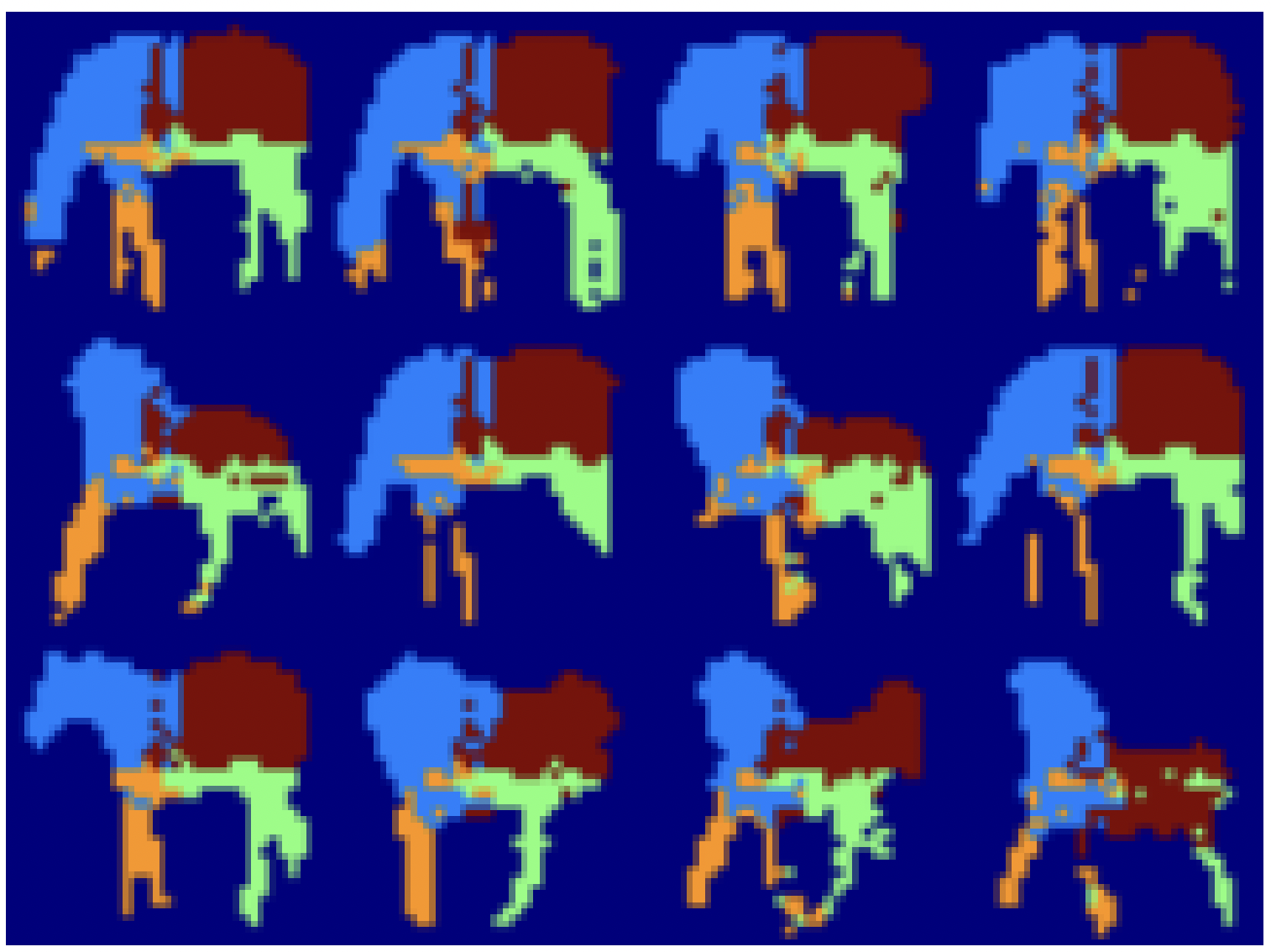

Deep Part-Based Generative Shape Model with Latent Variables

Alexander Kirillov,

Mikhail Gavrikov,

Ekaterina Lobacheva,

Anton Osokin,

Dmitry Vetrov

British Machine Vision Conference (BMVC), 2016

paper

/

bibtex

|

|

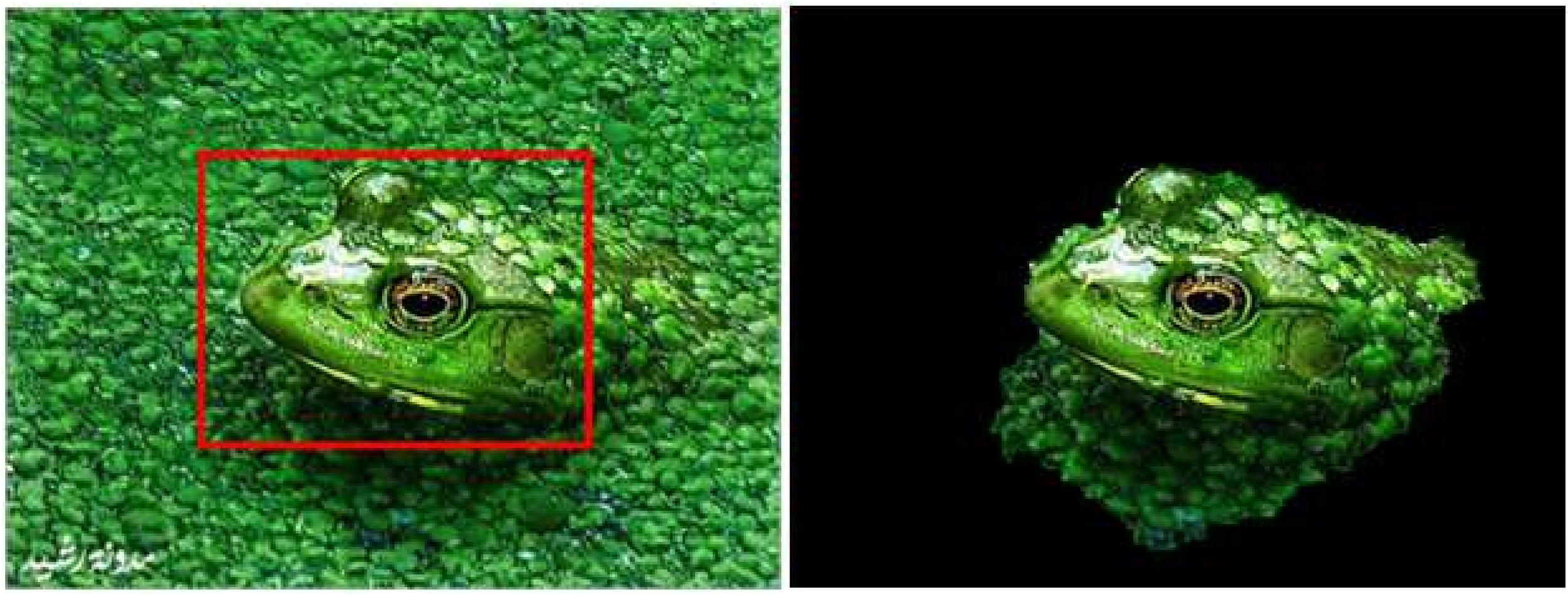

Joint Optimization of Segmentation and Color Clustering

Ekaterina Lobacheva,

Olga Veksler,

Yuri Boykov

International Conference on Computer Vision (ICCV), 2015

paper

/

bibtex

|

The webpage template was borrowed from Jon Barron.

|

|